Why actually hitting 144fps is so hard in most games

Higher framerates can be great, but getting there requires more than just hardware.

It's the Holy Grail of current gaming PCs: 144fps. You've splurged on a great gaming monitor, and paired it up with the fastest CPU and one of the best graphics cards money can buy. It's so smooth, so responsive, and you're ready to dominate your opponents with your superior skills—or at least your higher refresh rate. There's only one problem. Hitting 144fps (or more) in many games is difficult, and sometimes it's just flat out impossible. What gives?

It starts with a game's core design and features. Not to throw shade on consoles (whatever, I'm totally throwing shade on consoles), but when several of the current generation gaming platforms cannot output above 60Hz, it's only natural that the games played on them don't go out of their way to exceed 60fps, or even 30fps in some cases. When a game developer starts from that perspective, it can be very difficult to correct down the line. We've seen games like Fallout 4 tie physics, movement speed, and more to framerate, often with undesirable results.

It's not just about targeting 30 or 60fps, however. Game complexity keeps increasing, and complexity means doing more calculations. Singleplayer games also tend to be a different experience from multiplayer games. Multiplayer games are inherently more competitive, which means higher fps can be more beneficial for the top players, and they often omit a bunch of things that can increase frametimes.

Think about games like Counter-Strike, Overwatch, PUBG, and Fortnite for example. There's very little in the way of AI or NPC logic that needs to happen. Most of the world is static and it's only the players running around, which means a lot less overhead and ultimately the potential for higher framerates.

Primarily singleplayer games are a different matter. Look at the environments of Assassin's Creed Odyssey, Monster Hunter World, and Hitman 2. There can be hundreds of creatures, NPCs, and other entities that need to be processed, each with different animations, sounds, and other effects. That can bog down even the fastest CPUs, where much of that processing occurs.

Yes, the CPU and not the GPU. While the GPU is often considered the bottleneck for gaming performance, a GPU limit is mostly a matter of selecting the appropriate resolution and graphics quality. Turn down the settings and/or resolution far enough and the CPU becomes the limiting factor. And in complex games, that CPU limit can easily fall below 144fps. So while a fast graphics card is often necessary to hit 144fps, an equally fast CPU may also be required.
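To make the CPU-versus-GPU point concrete, here's a simplified Python sketch. It assumes CPU and GPU work overlap, so whichever side takes longer per frame sets the framerate. The per-frame millisecond figures, the setting labels, and the `effective_fps` and `bottleneck` helpers are all hypothetical, not measurements from any of the games mentioned here.

```python
def effective_fps(cpu_ms: float, gpu_ms: float) -> float:
    """Framerate when each frame needs cpu_ms of CPU time and gpu_ms of GPU time.

    Simplified model (an assumption): CPU and GPU work are pipelined,
    so the slower of the two sets the pace.
    """
    return 1000.0 / max(cpu_ms, gpu_ms)


def bottleneck(cpu_ms: float, gpu_ms: float) -> str:
    """Which side limits the framerate in this simplified model."""
    return "CPU" if cpu_ms >= gpu_ms else "GPU"


# Hypothetical numbers: CPU time stays fixed at 8.0ms per frame,
# while lowering resolution/quality shrinks the GPU's share.
cpu_ms = 8.0
for label, gpu_ms in [("4K ultra", 20.0), ("1440p high", 11.0),
                      ("1080p medium", 7.0), ("1080p low", 4.0)]:
    fps = effective_fps(cpu_ms, gpu_ms)
    print(f"{label:>13}: {fps:6.1f} fps ({bottleneck(cpu_ms, gpu_ms)}-bound)")
```

With the CPU fixed at a hypothetical 8ms per frame, lowering resolution and quality only helps until the GPU gets faster than the CPU. From then on the result is stuck at 125fps, short of 144fps no matter how low the settings go.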

Assassin's Creed Odyssey can almost reach 144fps ... with an RTX 2080 Ti.

Hitman 2 slams into a CPU bottleneck of around 122fps.

Monster Hunter World also has difficulty getting above 120fps.

Look at the CPU benchmarks in Assassin's Creed, Monster Hunter, and Hitman. Running at 1080p and low or medium quality, there's excellent scaling in terms of CPU performance, but 144fps is still a difficult hurdle to clear. More importantly, the scaling comes mostly from clockspeed, with core and thread counts being less of a factor—especially moving beyond 6-core processors. That's because most games are still ruled by a single thread that does a lot of the work.

Flip things around and think about each frame in terms of milliseconds. For a steady 60fps, each frame has at most 16.7ms of graphics and processing time. Jump to 144fps and each frame only has 6.9ms in which to get everything completed. But how much time does it actually take to complete each part of rendering a frame? The answer is that it varies, and this leads into a discussion of Amdahl's Law.
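The per-frame budget is simply 1000ms divided by the target framerate. This short Python sketch computes it and checks a made-up breakdown of per-frame work against it. The stage names and timings are purely illustrative assumptions, and the sketch treats the stages as running one after another on a single thread.

```python
def frame_budget_ms(fps: float) -> float:
    """Milliseconds available per frame at a steady target framerate."""
    return 1000.0 / fps


def fits_budget(stage_ms: dict, fps: float) -> bool:
    """True if the stages, run back to back, finish within one frame's budget."""
    return sum(stage_ms.values()) <= frame_budget_ms(fps)


# Hypothetical per-frame costs in ms, assumed to run serially on one thread.
stages = {"input": 0.2, "game logic": 2.5, "AI": 1.5,
          "audio": 0.5, "render submission": 2.8}

for fps in (30, 60, 144, 240):
    verdict = "fits" if fits_budget(stages, fps) else "too slow"
    print(f"{fps:>3} fps: {frame_budget_ms(fps):5.2f} ms budget, "
          f"{sum(stages.values()):.1f} ms of work -> {verdict}")
```

The same 7.5ms of hypothetical work fits comfortably at 60fps but blows through the 6.9ms budget at 144fps, and the 4.2ms budget at 240fps.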

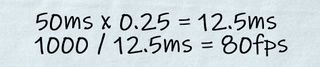

The gist of Amdahl's Law is that there are always portions of code that can't be parallelized. Imagine a hypothetical game where a single 4.0GHz Intel core takes 50ms to handle all of the calculations for each frame. That game would be limited to 20fps. If 75 percent of the game code can be split into subtasks that run concurrently, but 25 percent executes on a single thread, then regardless of how many CPU cores are available, the best-case performance on a 4.0GHz Intel CPU would still only be 80fps, because the single-threaded 25 percent alone takes 12.5ms per frame. I did some quick and dirty napkin math to illustrate.
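Here's that napkin math as a small Python sketch of Amdahl's Law. It assumes the parallel 75 percent splits perfectly evenly across cores with no overhead, which real games never quite achieve, and `amdahl_frame_ms` is just an illustrative helper name. The 50ms single-core frame time and 25 percent serial fraction come from the hypothetical game described above.

```python
import math


def amdahl_frame_ms(single_core_ms: float, serial_fraction: float, cores: float) -> float:
    """Frame time under Amdahl's Law.

    The serial part never shrinks; the parallel part divides evenly
    across `cores` (ideal scaling is an assumption).
    """
    serial_ms = single_core_ms * serial_fraction
    parallel_ms = single_core_ms * (1.0 - serial_fraction) / cores
    return serial_ms + parallel_ms


SINGLE_CORE_MS = 50.0  # hypothetical game: 50ms per frame on one 4.0GHz core
SERIAL = 0.25          # 25 percent of the work stays on one thread

for cores in (1, 2, 4, 8, 16, math.inf):
    ms = amdahl_frame_ms(SINGLE_CORE_MS, SERIAL, cores)
    print(f"{cores:>4} cores: {ms:6.2f} ms/frame -> {1000.0 / ms:5.1f} fps")
```

Each doubling of cores helps less than the last. One core gives 20fps, and even with unlimited cores (`math.inf`) the frame time only falls to the 12.5ms serial floor, or 80fps.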

Reworking the game code so that only 12.5 percent executes on a single thread, maybe even 5 percent, can help. That cuts the serial portion to 6.25ms or 2.5ms per frame, so 160fps or even 400fps becomes possible. But that takes developer time that might be better spent elsewhere, and of course CPUs don't have an infinite number of cores and threads. The point is there's a limited amount of time in which to handle all the processing of user input, game state, network code, graphics, sound, AI, etc., and more complex games inherently require more time.
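Extending the same model shows why shrinking the serial share matters, and why finite core counts still bite. This Python sketch again assumes perfect scaling of the parallel part and the same hypothetical 50ms single-core frame. It reports the best-case framerate for each serial fraction plus the smallest core count that reaches 144fps. `min_cores_for` is a hypothetical helper, and the tiny tolerance only guards against floating-point rounding.

```python
from typing import Optional


def frame_ms(single_core_ms: float, serial_fraction: float, cores: int) -> float:
    """Amdahl's Law frame time, assuming the parallel part scales perfectly."""
    return (single_core_ms * serial_fraction
            + single_core_ms * (1.0 - serial_fraction) / cores)


def best_case_fps(single_core_ms: float, serial_fraction: float) -> float:
    """Framerate ceiling with unlimited cores: only the serial part remains."""
    return 1000.0 / (single_core_ms * serial_fraction)


def min_cores_for(target_fps: float, single_core_ms: float, serial_fraction: float,
                  max_cores: int = 1024) -> Optional[int]:
    """Smallest core count reaching target_fps, or None if none up to max_cores does."""
    for cores in range(1, max_cores + 1):
        fps = 1000.0 / frame_ms(single_core_ms, serial_fraction, cores)
        if fps >= target_fps - 1e-9:  # tolerance for floating-point rounding
            return cores
    return None


SINGLE_CORE_MS = 50.0  # hypothetical game from the text
for serial in (0.25, 0.125, 0.05):
    cores = min_cores_for(144, SINGLE_CORE_MS, serial)
    needed = "never" if cores is None else f"{cores} cores"
    ceiling = best_case_fps(SINGLE_CORE_MS, serial)
    print(f"{serial:6.1%} serial: ceiling {ceiling:5.1f} fps, 144fps needs {needed}")
```

Under these idealized assumptions, 25 percent serial never reaches 144fps, 12.5 percent needs 63 cores, and 5 percent needs 11, which is why cutting the single-threaded portion matters more than piling on cores.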

Even with 4GHz and faster CPUs working in tandem with thousands of GPU cores, 6.9ms goes by quickly, and if you're looking at a 240Hz display running games at 240fps, that's only 4.2ms for each frame. If there's ever a hiccup along the way (e.g., the game needs to load some objects or textures from storage, which could take anywhere from a few milliseconds on a fast SSD to perhaps tens of ms on a hard drive), the game will stutter hard. That's the world we live in.
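A rough way to see why a storage hiccup hurts so much: on a fixed-refresh display with vsync, a frame that misses its refresh has to wait for the next one. This Python sketch assumes that simple model (no G-Sync or FreeSync, no extra buffering) and assumes the load blocks the frame outright. The 6ms base frame time and the 3ms and 30ms load times are illustrative guesses within the ranges mentioned above, and `refresh_intervals` is a hypothetical helper.

```python
import math


def refresh_intervals(frame_ms: float, refresh_hz: float) -> int:
    """Display refreshes a frame spans under vsync on a fixed-refresh display.

    Simplified model (an assumption): a late frame waits for the next refresh,
    so the previous image repeats until it arrives.
    """
    interval_ms = 1000.0 / refresh_hz
    return math.ceil(frame_ms / interval_ms)


BASE_MS = 6.0  # hypothetical normal frame, just inside the ~6.9ms budget at 144Hz
for label, hitch_ms in [("no hitch", 0.0), ("fast SSD load", 3.0),
                        ("hard drive load", 30.0)]:
    total_ms = BASE_MS + hitch_ms
    n = refresh_intervals(total_ms, 144)
    print(f"{label:>15}: {total_ms:4.1f} ms frame -> spans {n} refresh(es) at 144Hz")
```

In this model, the 36ms hard drive frame spans six 144Hz refreshes, so the screen effectively freezes for that long, which is the stutter you feel.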

Let's put it a different way. Modern PCs can potentially chew through billions of calculations every second, but each calculation is extremely simple: A + B for example. Handling a logic update for a single entity might require thousands or tens of thousands of instructions, and all of those AI and entity updates are still only a small fraction of what has to happen each frame. Game developers need to balance everything to reach an acceptable level of performance, and on PCs that can mean the ability to run on everything from an old 4-core Core 2 Quad or Athlon X4 up through a modern Ryzen or 9th Gen Core CPU, and GPUs ranging from Intel integrated graphics up through the GeForce RTX 2080 Ti.
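Some back-of-the-envelope arithmetic puts those numbers in perspective. This Python sketch assumes a single 4.0GHz core averaging two instructions per clock, with 20 percent of each frame's time on that core set aside for entity logic at 10,000 instructions per entity. All of those are hypothetical round numbers, and the sketch ignores the cache misses and memory stalls that eat into real code.

```python
def entities_per_frame(clock_ghz: float, ipc: float, fps: float,
                       entity_share: float, instructions_per_entity: int) -> int:
    """How many entity updates fit in one frame on one core (idealized)."""
    cycles_per_frame = clock_ghz * 1e9 / fps          # clock cycles in one frame
    instructions_per_frame = cycles_per_frame * ipc   # assumes a steady average IPC
    entity_instructions = instructions_per_frame * entity_share
    return int(entity_instructions // instructions_per_entity)


for fps in (60, 144, 240):
    n = entities_per_frame(clock_ghz=4.0, ipc=2.0, fps=fps,
                           entity_share=0.2, instructions_per_entity=10_000)
    print(f"{fps:>3} fps: room for about {n} entity updates per frame")
```

Under the same assumptions, going from 60fps to 144fps cuts the room for entity updates by more than half, which is why busy open worlds hit a CPU wall.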

It's possible to create games that can run at extremely high framerates. We know this because they already exist. But those games usually aren't state of the art in terms of graphics, AI, and other elements. They're fundamentally simpler in ways that aren't always obvious. Even reducing game and graphics complexity can only go so far. Seven-year-old CS:GO at 1080p with an overclocked 5GHz Core i7-8700K tops out at around 300fps (3.3ms per frame), with stutters dropping minimum fps to about half that. You can run CS:GO at 270-300fps on everything from a GTX 1050 to a Titan RTX, because the CPU is the main limiting factor.

In short, hitting 144fps isn't just about hardware. It's about software and game design, and sometimes you just have to let go. If you've got your heart set on 144fps gaming, the best advice I can give is to remember that framerates (or frametimes, if you prefer) aren't everything. For competitive multiplayer where every possible latency advantage can help, drop the settings to minimum and see how the game runs, and potentially increase a few settings if there's wiggle room.

Even if you can't maintain 144fps or more, 144Hz refresh rates are still awesome; I can feel the difference just interacting with the Windows desktop. The higher quality 144Hz displays also support G-Sync or FreeSync, which can help avoid noticeable stutters and tearing when you drop a bit below 144fps. Perfectly smooth framerates would be nice, but that alone won't make a game great. So kick back and just enjoy the ride, regardless of your hardware or framerate.

Jarred's love of computers dates back to the dark ages when his dad brought home a DOS 2.3 PC and he left his C-64 behind. He eventually built his first custom PC in 1990 with a 286 12MHz, only to discover it was already woefully outdated when Wing Commander was released a few months later. He holds a BS in Computer Science from Brigham Young University and has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.