When will PC hardware get exciting again?

With CES now behind us, we reflect on the show itself and where PC hardware will go from here.

PC hardware has followed a steady upward trend in performance and features for decades, but if this year's CES is anything to go by, things are continuing to slow down. They haven't come to a grinding halt, just stagnated a bit. Progress isn't dead, but CES definitely isn't the place for the latest tech advancements to show up anymore. We've watched and attended CES for over two decades, and based on our impressions as well as discussions with other press, this was the least exciting CES we can recall.

The laptop space will definitely become more interesting with the arrival of AMD's Ryzen 7 4000 mobile processors, but beyond that, it felt like many companies decided that showing something 'new' at CES was better than showing nothing at all. 360Hz monitors, chip stacking, and G-Sync TVs were just some of the announcements to come out of CES, but where they go from here is anyone's guess.

Chip stacking is not a snack, but it does sound delicious

Jarred: Intel's big news came in two areas. Besides talking about 10th Gen Core laptops and performance, Intel showed off Tiger Lake and gave a public demonstration of a discrete Xe Graphics GPU running Warframe and Destiny 2.

The dedicated graphics card is actually the less interesting of the two, on a technical level. Intel joining the fray doesn't really change much. We'll need to see significantly higher performance than what was shown at CES, and then we need retail pricing, power requirements, and a test of real-world performance in a bunch of games.

Joanna: It would be nice to get more competition at some point, from a consumer perspective. We have Nvidia and AMD to choose from. That's it. It's great that both companies have come out with mid-range and (sort of) budget options in the last year, but Intel has to find a way to fit into that space while still being competitive. If it can, then things will get interesting.

Jarred: Yeah, AMD and Intel aren't pulling any punches on the CPU front, and I'd love to see AMD, Nvidia, and Intel throw down in the GPU sector. Just three years ago, AMD was far behind Intel, with an outdated and underperforming CPU lineup. Ryzen really changed all that. AMD still can't win every benchmark, but it's winning on overall performance, multithreaded performance, and efficiency. That's why Intel's lack of any major CPU announcements at CES was a bit surprising.

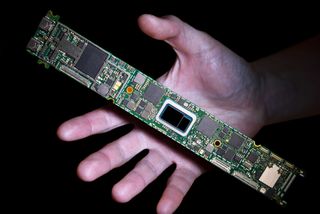

What we got was a rehash about 'performance in the real world,' plus a sneak peek at Tiger Lake. Tiger Lake sounds like it will be mobile first, just like Ice Lake, and it brings a new CPU architecture along with Xe Graphics, targeting ultraportable laptops and tablets. The small Tiger Lake chip shown above sits in the middle of the board. The bigger deal for the future, though, is Foveros, Intel's 3D chip stacking technology, which debuts in Lakefield rather than Tiger Lake: the CPU cores and graphics sit on a compute die stacked on top of a base die that handles I/O, with LPDDR4X memory packaged on top as a bonus.

Joanna: So, in layman's terms, that means faster clockspeeds?

Jarred: Not necessarily, but overall improvements in performance are certainly possible. Chip stacking provides a third dimension to processor scaling, so instead of die sizes getting larger, the dies would just get imperceptibly thicker. Foveros could allow for multiple CPU layers, meaning lots more cores in the same area. Getting the heat out of the inner layers of a 3D chip becomes more complex, but there's a lot that can be done and Foveros is impressive stuff.

Jorge: This pretty much means you can realistically do photo/video editing on a tablet or ultraportable without wanting to pull your hair out.

Joanna: So, it's theoretically better than having a gazillion cores and threads? I'm just trying to think in terms of single-core versus multi-core processing. But since faster clockspeeds aren't necessarily the aim of chip stacking, maybe it just brings laptop or desktop performance to smaller form factors like tablets?

Jarred: That's the hope. Foveros potentially allows for more cores and threads than traditional processor designs, so higher core and thread counts with lower clockspeeds would be more likely than fewer cores with higher clockspeeds. Intel also demonstrated Lakefield, a chip with one 'big' Sunny Cove core and four 'little' Atom-class (Tremont) cores, which seems more like something you'd see from an ARM tablet. It was in the Lenovo ThinkPad X1 Fold, which has a foldable OLED screen. I'm still waiting to see how Intel applies this to desktop PCs, which are my first love in PC hardware.

The big 360

Joanna: Moving on, let's talk monitors. Can the human eye even detect 360Hz?

Jarred: That depends on what you mean by 'detect.' I'm not exactly young, and I've never had the greatest eyesight, but when I viewed 240Hz and 360Hz screens side by side, the side-scrolling League of Legends screenshot looked slightly better at 360Hz. Basically, text was easier to read. So yes, I could see a difference between various refresh rates and framerates. But does it matter when it comes to playing games?

Joanna: It seems like higher refresh rates are trying to compensate for human reaction time. Even if the human eye can detect higher and higher refresh rates beyond a reasonable doubt, everyone's reaction time is different, and our reactions generally slow down as we get older. There have been so many studies on this, including some showing that videogames can improve reaction time. I remain skeptical to a degree, however. I can definitely tell that my reaction time improves in games like Overwatch because I get used to the bombardment of images, but at what point does it plateau?

Jarred: Nvidia tried to answer that question with a couple of high refresh rate tests. One involved trying to snipe a guy in a custom CS:GO map through a narrow doorway. At 60Hz, I managed exactly one kill out of 50 attempts. At 360Hz, I got around 40 out of 50. The reduction in lag was certainly noticeable. In the other test, I had to get as many kills via headshots as possible in 45 seconds. At 60Hz, I scored 15 kills with a lot of 'spray and pray' clicking to hit the slowly moving target. At 360Hz, I scored 21 kills and often didn't need more than a single shot.

My main complaint with Nvidia's test scenarios is that we could only test at 60Hz or 360Hz. 360Hz might give competitive esports players a performance bump (Nvidia says around 3-4 percent compared to 240Hz in their research), but for someone at my skill level, 240Hz and 360Hz likely wouldn't matter.
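One way to see why the gains shrink at the top end is to look at the time between screen refreshes, which is simply 1,000 milliseconds divided by the refresh rate. The short Python sketch below computes it. Note that this is only the display's refresh interval, not total system latency, which also includes input sampling, game and render time, and panel response, none of which are modeled here.

```python
# Sketch: refresh interval only (not end-to-end input latency).

def frame_time_ms(refresh_hz: float) -> float:
    """Milliseconds between screen refreshes at the given refresh rate."""
    return 1000.0 / refresh_hz


for hz in [60, 144, 240, 360]:
    print(f"{hz:>3} Hz -> {frame_time_ms(hz):5.2f} ms per refresh")

# Diminishing returns: each step up saves less absolute time.
saved_60_to_240 = frame_time_ms(60) - frame_time_ms(240)
saved_240_to_360 = frame_time_ms(240) - frame_time_ms(360)
print(f"60 -> 240 Hz saves {saved_60_to_240:.2f} ms")
print(f"240 -> 360 Hz saves {saved_240_to_360:.2f} ms")
```

Going from 60Hz to 240Hz cuts the refresh interval by about 12.5ms, while going from 240Hz to 360Hz saves only about 1.4ms, which fits the point that 240Hz versus 360Hz is unlikely to matter for most players.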

Jorge: Without a side-by-side comparison, I think most people won't be able to appreciate the difference. It ends up being something I don't really need but really want after playing some CS:GO on displays running above 300Hz. I'd be interested to see which refresh rate hits the 'sweet spot' for competitive gaming.

TVs, now with G-Sync

Jorge: How do we feel about G-Sync coming to TVs now? I think I am more excited about G-Sync TVs than 8K televisions since there's, you know, no 8K content to speak of.

Joanna: I'm more excited about G-Sync TVs as well—but I get the feeling that adding the G-Sync moniker to TVs already on the market is going to jack up the price, even if there isn't actually anything different about that TV from a technical standpoint. I mean, those LG TVs don't have the actual G-Sync hardware in them.

Jorge: I feel like we are about two years away from 8K being a thing. I just like the idea of a gaming-centric smart TV over 42 inches for the living room that's just as fast as the much smaller display on my desk. If you already use a gaming PC in your living room, one of these 120Hz G-Sync OLED TVs is a killer option.

Jarred: Technically, Nvidia already had a couple of BFGDs (Big Format Gaming Displays), but the LG TVs take a different route. These G-Sync Compatible OLED TVs use Adaptive Sync rather than G-Sync modules, a tacit admission that expensive G-Sync modules in a TV aren't what people want. Thanks to HDMI 2.1 with VRR (variable refresh rate), which is only supported by Nvidia's Turing GPUs right now, the price is far lower than previous BFGD options like the HP Omen X Emperium. In short, I'd take a 65-inch OLED 120Hz G-Sync Compatible TV over a 65-inch VA 144Hz G-Sync Ultimate TV any day, considering the former costs about half as much.

As for 8K: no. Very few games can come anywhere near 144fps at 4K and decent settings, and quadrupling the pixel count for 8K is way beyond our current hardware, unless you just want upscaling. Plus, 8K displays mean going backward on refresh rates, with 60Hz panels as the top offerings. Maybe in ten years, gaming at 8K and 120fps will be something we can actually do?
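The 'quadruple the pixels' figure falls straight out of the resolutions: 8K (7680x4320) doubles both dimensions of 4K (3840x2160). The sketch below also compares raw pixel throughput, a rough proxy that assumes rendering cost scales with pixels drawn per second. Real GPU workloads don't scale perfectly linearly with pixel count, so treat the result as a ballpark figure.

```python
# Standard 16:9 consumer resolutions (width, height) in pixels.
RESOLUTIONS = {
    "1080p": (1920, 1080),
    "4K": (3840, 2160),
    "8K": (7680, 4320),
}


def pixels(name: str) -> int:
    """Total pixels in one frame at the named resolution."""
    width, height = RESOLUTIONS[name]
    return width * height


def pixels_per_second(name: str, fps: int) -> int:
    """Pixels drawn per second: a crude stand-in for rendering load (assumption)."""
    return pixels(name) * fps


pixel_ratio = pixels("8K") / pixels("4K")
throughput_ratio = pixels_per_second("8K", 120) / pixels_per_second("4K", 144)
print(f"8K has {pixel_ratio:.1f}x the pixels of 4K")
print(f"8K at 120fps needs {throughput_ratio:.2f}x the pixel rate of 4K at 144fps")
```

By this measure, 8K at 120fps works out to roughly 3.3 times the pixels per second of 4K at 144fps, which very few games reach today.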

5000MB/s read speeds are so 2019

Jarred: Your state-of-the-art M.2 NVMe PCIe Gen4 SSD from last year? It's now outdated. In 2020, we will see multiple SSDs hit speeds of up to roughly 7000MB/s. I saw early samples of such drives at Patriot, XPG, and several others. They're all built from basically the same core hardware, with one of several controller options, and the extra speed still won't matter for gaming. These 7000MB/s drives are pretty much maxing out the new PCIe Gen4 x4 interface, which is nuts for home users, but datacenters will love it. I want a 4TB drive that can do 7000MB/s as well, not because I need it, but why not?
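Why is roughly 7000MB/s described as maxing out the interface? PCIe 4.0 runs each lane at 16 GT/s with 128b/130b encoding, twice the per-lane rate of PCIe 3.0. This Python sketch computes the theoretical one-direction bandwidth of an x4 link. It ignores packet and protocol overhead, so usable throughput is somewhat lower than the figure it prints.

```python
# Per-lane signaling rates in gigatransfers per second (GT/s).
# PCIe 3.0 and 4.0 both use 128b/130b line encoding.
GT_PER_S = {3: 8.0, 4: 16.0}
ENCODING_EFFICIENCY = 128 / 130


def link_bandwidth_mb_s(gen: int, lanes: int) -> float:
    """Theoretical one-direction bandwidth in MB/s (decimal, 10**6 bytes).

    Ignores packet/protocol overhead, so real drives top out somewhat lower.
    """
    bits_per_s = GT_PER_S[gen] * 1e9 * lanes * ENCODING_EFFICIENCY
    return bits_per_s / 8 / 1e6


gen3_x4 = link_bandwidth_mb_s(3, 4)
gen4_x4 = link_bandwidth_mb_s(4, 4)
print(f"PCIe 3.0 x4: {gen3_x4:.0f} MB/s")
print(f"PCIe 4.0 x4: {gen4_x4:.0f} MB/s")
print(f"A 7000 MB/s drive uses {7000 / gen4_x4:.0%} of the Gen4 x4 link")
```

The Gen4 x4 result is about 7,877MB/s, so a 7000MB/s drive already uses close to 90 percent of the link's theoretical bandwidth, and protocol overhead eats into what little headroom remains.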

Jorge: Guess I can throw away the 'M.2 NVMe PCIe 4 Lyfe' shirts I had printed. Now that SSDs are becoming more affordable, I'm glad we are finally moving away from HDDs as an industry. Given the size and demands of games like Red Dead Redemption 2 and the now-delayed Cyberpunk 2077, we could easily see games over 200GB this year. For content creators who deal in large files, things are looking good.

Jarred: Closely related to SSDs is the topic of DRAM, and things aren't changing much this year. Everyone now makes RGB DIMMs (or will soon), DDR4-3200 to DDR4-3600 seems to be the sweet spot before pricing skyrockets, and timings are pretty good. More companies are showing off enthusiast 32GB DIMMs as well, and Micron and SK Hynix both showed DDR5 modules, though no current or near-term desktop platform supports DDR5. We'll probably see DDR5 platforms in 2021.
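To put the DDR4 speed grades in context, the sketch below uses two common back-of-the-envelope formulas: peak bandwidth is the transfer rate times 8 bytes per 64-bit channel, and first-word CAS latency in nanoseconds is CL x 2000 / (MT/s). The CL16 timings are illustrative values chosen for the example, not figures from any specific kit shown at CES.

```python
def peak_bandwidth_gb_s(mt_per_s: int, channels: int = 2, bus_bytes: int = 8) -> float:
    """Theoretical peak bandwidth in GB/s: transfers/s x 8 bytes per 64-bit channel."""
    return mt_per_s * 1e6 * bus_bytes * channels / 1e9


def cas_latency_ns(mt_per_s: int, cl: int) -> float:
    """First-word CAS latency in ns.

    DDR transfers twice per I/O clock, so one clock cycle lasts
    2000 / (MT/s) nanoseconds, and CAS latency is CL of those cycles.
    """
    return cl * 2000 / mt_per_s


# Example speed/timing pairs (CL16 is an assumed, illustrative timing).
for speed, cl in [(3200, 16), (3600, 16)]:
    print(
        f"DDR4-{speed} CL{cl}: {peak_bandwidth_gb_s(speed):.1f} GB/s dual-channel, "
        f"{cas_latency_ns(speed, cl):.2f} ns CAS latency"
    )
```

Moving from DDR4-3200 to DDR4-3600 at the same CL raises theoretical dual-channel bandwidth from 51.2GB/s to 57.6GB/s (12.5 percent) and trims CAS latency from 10ns to about 8.9ns.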

Joanna: I'm more interested in how the RAM of the future will change how we boot up our computers, such as restoring Windows to its previous state without putting the PC into sleep or hibernation mode. But that's a way off.

In terms of gaming, 32GB is still overkill for the majority of people, not to mention costly even with prices coming down. RAM can only get faster so many times before it becomes old hat. Maybe I just get bored easily. I do love RGB lighting on RAM, though. Pretty!

Double rainbows across the PCs

Joanna: Speaking of—sorry, not sorry.

Jarred: To bling or not to bling, that is the question. I have plenty of RGB-enabled hardware. I turn off most of the colored lights if I can, because when it's dark and I'm watching a movie or playing a game, I don't want my keyboard, case, mouse, mousepad, etc. to light up the room.

Joanna: But do we feel like this trend is going to desaturate any time soon?

Jorge: RGB is here to stay whether we like it or not. There were a couple of hardware manufacturers at CES dipping their toes into ambient lighting geared toward streamers. The idea is that your entire living room will flash red whenever your health is low in Apex Legends or put on a celebratory light show when you get a new follower. I immediately rolled my eyes at the idea but then thought, what if my lights went nuts whenever someone liked a photo of my dog on Instagram? I'd be into that.

Jarred: I'm still rolling my eyes. Anyway, I don't think I saw any new RGB-ified hardware at CES, but that's probably because everything that can be lit is already glowing. Wait! AMD and Intel don't technically make RGB CPUs; the lighting is on the CPU cooler. They should fix this. Maybe add mini LEDs around the edge of the CPU in a way that will still show up when the PC is powered on? (This is sarcasm. Don't listen to me.)

Joanna: Seriously though, there is one thing I want from RGB-enabled anything: an obvious button that will shut the damn lighting off when my computer is off. I don't want to have to go into the BIOS, sort through the options in a software utility, or unplug my keyboard from my tower whenever my cat steps on it.

Jorge: The PC Gamer most requested RGB feature? Turning it off.
