What DirectX 12 means for gamers and developers

The Modern Game Development Ecosystem

Of course, moving all these responsibilities from the API and driver level to the game itself will increase the code size and development effort for the latter. In fact, a common argument in favor of higher-level interfaces in all areas of computing is shielding the programmer from dealing with all the complexities of each underlying system. However, the way most modern games are built works out in favor of low-level APIs in this regard.

Unlike during the 90s or early 00s, it’s very rare these days for any game to be based on a one-shot engine built directly on top of a given graphics API. Many large publishers have their own in-house engine teams dedicated purely to keeping their technology up to date, and even independent developers have plenty of high-quality, professionally maintained engines to choose from. As such, the increased programming complexity of low-level APIs will in many cases be absorbed by middleware developers, who have the resources and expertise to deal with these challenges, rather than hitting game developers directly.

Additionally, just because an API is not required to check the correctness of everything it is asked to do (which can be expensive in terms of performance) does not mean that it is not allowed to do so. All low-level APIs offer optional validation layers, which should help mitigate developers’ increased responsibilities. Nonetheless, tool support always lags behind the introduction of any new technology, and it will take some time for tools to catch up to where they are for established APIs.
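To make the idea of optional validation concrete, here is a minimal C++ sketch. It is not Direct3D 12 code: `Device`, `Buffer` and `copy` are hypothetical names invented for illustration. The only point is that the same call can be checked in a debug configuration and left unchecked when performance matters. In Direct3D 12 itself this role is played by the debug layer.

```cpp
#include <cassert>
#include <cstddef>
#include <stdexcept>
#include <vector>

// Hypothetical stand-in for a GPU buffer; not a real Direct3D 12 type.
struct Buffer {
    std::vector<int> data;
};

// Hypothetical device with an optional validation switch.
class Device {
public:
    explicit Device(bool validate) : validate_(validate) {}

    // Copies `count` elements from src to dst. With validation enabled, bad
    // arguments are rejected; without it, correctness is the caller's job.
    void copy(const Buffer& src, Buffer& dst, std::size_t count) const {
        if (validate_ && (count > src.data.size() || count > dst.data.size())) {
            throw std::out_of_range("copy: count exceeds buffer size");
        }
        for (std::size_t i = 0; i < count; ++i) {
            dst.data[i] = src.data[i];
        }
    }

private:
    bool validate_;
};

// Returns true if the validating device caught the out-of-range request.
bool validation_catches_bad_copy() {
    Device debug_device(true);
    Buffer src{{1, 2, 3}};
    Buffer dst{{0, 0}};
    try {
        debug_device.copy(src, dst, 3);  // dst only holds 2 elements
    } catch (const std::out_of_range&) {
        return true;
    }
    return false;
}
```

With validation switched off, the same out-of-range call would run straight past the end of `dst`, which is exactly the kind of mistake a low-level API leaves to the developer to avoid.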

How do low-level APIs work?

Clearly, there are significant incentives from both the hardware and software perspective for a clean-slate, low-level approach to graphics API design, and the current engine ecosystem seems to allow for it. But what exactly does “low-level” entail? DirectX 12 brings a plethora of changes to the API landscape, but the primary efficiency gains which can be expected stem from three central topics:

- Work creation and submission

- Pipeline state management

- Asynchronicity in memory and resource management

All these changes are connected to some extent, and work well together to minimize overhead, but for clarity we’ll look at each of them in turn, in isolation from the rest.

Work Creation and Submission

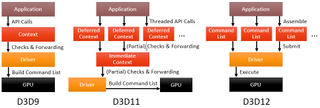

The ultimate goal of a graphics API is for the program using it to assemble a set of instructions that the GPU can follow, usually called a command list. In higher-level APIs, this is a rather abstract process, while it is performed much more directly in DirectX 12. The figure above compares the approach of a ‘classical’ high-level API (DirectX 9) with the first attempt at making parallelization possible in DirectX 11, and finally with the more direct process in DirectX 12.

In the good old days of annually increasing processor frequencies, what Direct3D 9 offered was just fine: the application uses a single context to submit draw calls, which the API checks and forwards to the driver, which in turn generates a command list for the GPU to consume. All of this is a sequential process, so in the age of consumer multicore chips a first attempt was made to allow for parallelization.

That attempt took the form of the Direct3D 11 concept of deferred contexts, which allow games to submit draw commands independently in multiple threads. However, all of them still ultimately need to pass through a single immediate context, and the driver can only complete the command list once this final pass has happened. Thus, the D3D11 approach allows for some degree of parallelism, but still causes a heavy load imbalance on the thread executing the tail end of the process.

Direct3D 12 cuts out the metaphorical middle man. Game code can directly generate an arbitrary number of command lists, in parallel. It also controls when these are submitted to the GPU, which can happen with far less overhead than in previous concepts as they are already much closer to a format the hardware can consume directly. This does come with additional responsibilities for the game code, such as ensuring the availability of all resources used in a given command list during the entirety of its execution, but should in turn allow for much better parallel load balancing.
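The shape of this model can be sketched in plain C++. This is a simplified illustration, not the Direct3D 12 API: `CommandList`, `CommandQueue` and `record_and_submit` are hypothetical names, and a "command" here is just a string. Each worker thread records into its own list, and the application decides when and in which order the finished lists are submitted.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <thread>
#include <vector>

// Hypothetical command list: just an ordered sequence of recorded commands.
using CommandList = std::vector<std::string>;

// Hypothetical queue; "submitting" appends the list's commands in order.
struct CommandQueue {
    std::vector<std::string> executed;
    void submit(const CommandList& list) {
        executed.insert(executed.end(), list.begin(), list.end());
    }
};

// Records one command list per worker thread, then submits them in a fixed order.
CommandQueue record_and_submit(std::size_t num_lists, std::size_t draws_per_list) {
    std::vector<CommandList> lists(num_lists);
    std::vector<std::thread> workers;
    workers.reserve(num_lists);

    for (std::size_t i = 0; i < num_lists; ++i) {
        // Each thread writes only to its own list, so recording needs no locks.
        workers.emplace_back([&lists, i, draws_per_list] {
            for (std::size_t d = 0; d < draws_per_list; ++d) {
                lists[i].push_back("draw " + std::to_string(i) + "." + std::to_string(d));
            }
        });
    }
    for (auto& w : workers) w.join();

    // Submission order is chosen by the application, not by recording order.
    CommandQueue queue;
    for (const auto& list : lists) queue.submit(list);
    return queue;
}
```

Because no thread touches another thread's list, the recording work can be spread evenly across cores, and only the cheap submission step is serialized.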

Pipeline State Management

The graphics or rendering pipeline consists of several interconnected steps, such as pixel shading or rasterization, which work together to render a given 3D scene. Managing the state of various components in this pipeline is an important task in any game, as this state will directly influence how each drawing operation is performed and thus the final output image.

Most high-level APIs provide an abstract, logical view of this state, divided into several categories which can be independently set. This provides a convenient mental image of the rendering process, but does not necessarily lend itself to high draw call throughput with low overhead.

The figure above, while greatly simplified, illustrates the basic issue. Many independent high-level state descriptions can exist, and at the start of any draw call any subset of them may be active. As such, the driver has to build the actual hardware representation of this combination of states, and maybe even check its validity, before it can start actually performing the draw call. While caching may alleviate this issue, it means moving complexity to the driver and running the risk of unpredictable and hard-to-debug behavior from the application perspective, as discussed earlier.

In DX12, state information is instead gathered in pipeline state objects. These are immutable once constructed, and thus allow the driver to check them and build their hardware representation only once when they are created. Using them is then ideally a simple matter of just copying the relevant description directly to the right hardware memory location before starting to draw.
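As a rough illustration of why immutability helps, consider the following C++ sketch. The types `PipelineDesc`, `PipelineState` and `FakeGpu`, as well as the bit layout, are made up for this example and do not correspond to real Direct3D 12 structures. Validation and translation to a "hardware" form happen once in the constructor, and binding afterwards is a plain copy.

```cpp
#include <cassert>
#include <cstdint>
#include <stdexcept>

// Hypothetical high-level state description (field names are illustrative).
struct PipelineDesc {
    bool depth_test;
    bool blending;
    std::uint8_t sample_count;  // 1, 2, 4 or 8 in this sketch
};

// Immutable once constructed: validation and "compilation" happen exactly once.
class PipelineState {
public:
    explicit PipelineState(const PipelineDesc& desc) : bits_(compile(desc)) {}
    std::uint32_t hardware_bits() const { return bits_; }

private:
    static std::uint32_t compile(const PipelineDesc& d) {
        if (d.sample_count != 1 && d.sample_count != 2 &&
            d.sample_count != 4 && d.sample_count != 8) {
            throw std::invalid_argument("unsupported sample count");
        }
        // Pack the state into a made-up hardware word.
        return (d.depth_test ? 1u : 0u) | (d.blending ? 2u : 0u) |
               (static_cast<std::uint32_t>(d.sample_count) << 8);
    }
    const std::uint32_t bits_;
};

// Binding is just a copy of the precomputed word; nothing is re-validated.
struct FakeGpu {
    std::uint32_t state_register = 0;
    void bind(const PipelineState& pso) { state_register = pso.hardware_bits(); }
};
```

An invalid combination is rejected when the object is built, typically at load time, rather than surfacing as extra work in the middle of a frame.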

Asynchronicity in Memory and Resource Management

Direct3D 12 will allow, and indeed force, game developers to manually manage all resources used by their games more directly than before. While higher-level APIs offer convenient views to resources such as textures, and may hide others like the GPU-native representation of command lists or storage for some types of state from developers completely, in D3D12 all of these can and must be managed by the developers.

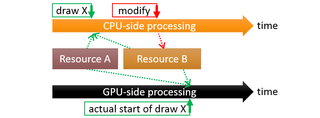

This not only means direct control over which resources reside in which memory at all times, but also makes game programmers responsible for ensuring that all data is where the GPU needs it to be when it is accessed. The GPU and CPU have always acted independently from each other in an asynchronous manner, but the potential problems (e.g. so-called pipeline hazards) arising from this asynchronicity were handled by the driver in higher-level APIs.

To give you a better idea why this can be a significant performance and resource drain, the figure above illustrates a simple example of such a hazard occurring. From the CPU side, the situation is clear-cut: a draw call X utilizing two resources A and B is performed, and later on resource B is modified. However, due to the asynchronous nature of CPU and GPU processing, the GPU may only start actually executing the draw call in question significantly after the game code on the CPU initially performed it, and after B has already changed. In these situations, drivers for high-level APIs could in the worst case be forced to create a full shadow copy of the required resources during the initial call. In D3D12, game developers are tasked with ensuring that such situations do not occur.
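One common way for an application to avoid this hazard is to track, per resource, the last submitted piece of GPU work that reads it, and to delay any write until that work has completed. The C++ sketch below simulates this on the CPU. `Resource`, `FakeGpu` and `safe_write` are hypothetical names, and the counter only mimics the idea behind a GPU fence rather than using the real Direct3D 12 fence interface.

```cpp
#include <cassert>
#include <cstdint>
#include <deque>

// Hypothetical resource that remembers the last submitted draw that reads it.
struct Resource {
    int value = 0;
    std::uint64_t last_use_fence = 0;
};

// Toy GPU that executes queued draws later than the CPU submits them.
class FakeGpu {
public:
    // Queues a draw reading `r`; returns the fence value that marks its completion.
    std::uint64_t submit_draw(Resource& r) {
        pending_.push_back(&r);
        r.last_use_fence = ++submitted_;
        return submitted_;
    }
    // Executes the oldest queued draw, recording the value it observed.
    void execute_one() {
        if (pending_.empty()) return;
        last_seen = pending_.front()->value;
        pending_.pop_front();
        ++completed_;
    }
    std::uint64_t completed() const { return completed_; }
    int last_seen = 0;

private:
    std::deque<Resource*> pending_;
    std::uint64_t submitted_ = 0;
    std::uint64_t completed_ = 0;
};

// Writes to `r` only after the GPU has finished every draw that reads it.
void safe_write(FakeGpu& gpu, Resource& r, int new_value) {
    while (gpu.completed() < r.last_use_fence) {
        gpu.execute_one();  // stands in for blocking on a fence
    }
    r.value = new_value;
}
```

Writing to the resource directly instead of going through `safe_write` would let the queued draw observe the new value, which is the situation the figure describes. Waiting is only one option; an application could also write to a fresh copy and keep the old one alive until the fence passes.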

In Summary

Tying it all together, the changes discussed above should result in a lean API which has the potential to aid in implementing games that perform both better and, perhaps even more importantly, with improved consistency.

Application-level control and assembly of command lists allows for well-balanced parallel work distribution and thus better utilization of multicore CPUs. Managing pipeline state in a way which more closely mirrors actual hardware requirements will reduce overall CPU load and in particular result in higher draw call throughput. And manual resource management, while complex, will allow game developers to fully understand all the data movement in their game. This insight should make it easier for them to craft a consistent experience and perhaps ultimately eliminate loading/streaming-related stutter completely.
