This new AI can mimic human voices with only 3 seconds of training

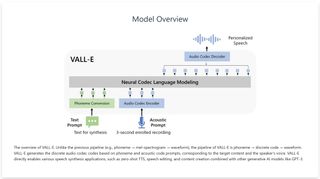

Vall-E, under development by a team of researchers at Microsoft, uses an all-new approach to learning how to talk.

Humanity has taken yet another step toward the inevitable war against the machines (which we will lose) with the creation of Vall-E, an AI developed by a team of researchers at Microsoft that can produce high-quality replicas of human voices from only a few seconds of sample audio.

Vall-E isn't the first AI-powered voice tool (xVASynth, for instance, has been kicking around for a couple of years now), but it promises to exceed them all in terms of pure capability. In a paper available at Cornell University (via Windows Central), the Vall-E researchers say that most current text-to-speech systems are limited by their reliance on "high-quality clean data" in order to accurately synthesize high-quality speech.

"Large-scale data crawled from the Internet cannot meet the requirement, and always lead to performance degradation," the paper states. "Because the training data is relatively small, current TTS systems still suffer from poor generalization. Speaker similarity and speech naturalness decline dramatically for unseen speakers in the zero-shot scenario."

("Zero-shot scenario" in this case essentially means the ability of the AI to recreate voices without being specifically trained on them.)

Vall-E, on the other hand, is trained with a much larger and more diverse data set: 60,000 hours of English-language speech drawn from more than 7,000 unique speakers, all of it transcribed by speech recognition software. The data fed to the AI contains "more noisy speech and inaccurate transcriptions" than that used by other text-to-speech systems, but the researchers believe the sheer scale and diversity of the input make Vall-E much more flexible, more adaptable, and (this is the big one) more natural than its predecessors.

"Experiment results show that Vall-E significantly outperforms the state-of-the-art zero-shot TTS system in terms of speech naturalness and speaker similarity," states the paper, which is filled with numbers, equations, diagrams, and other such complexities. "In addition, we find VALL-E could preserve the speaker’s emotion and acoustic environment of the acoustic prompt in synthesis."

You can actually hear Vall-E in action on GitHub, where the research team has shared a brief breakdown of how it all works, along with dozens of samples of inputs and outputs. The quality varies: Some of the voices are notably robotic, while others sound quite human. But as a sort of first-pass tech demo, it's impressive. Imagine where this technology will be in a year, or two, or five, as systems improve and the voice training dataset expands even further.

Which is, of course, why it's a problem. DALL-E, the AI art generator, is facing pushback over privacy and ownership concerns, and the ChatGPT bot is convincing enough that it was recently banned by the New York City Department of Education. Vall-E has the potential to be even more worrying because it could be used in scam marketing calls or to make deepfake videos more convincing. That may sound a bit hand-wringy, but as our executive editor Tyler Wilde said at the start of the year, this stuff isn't going away, and it's vital that we recognize the issues and regulate the creation and use of AI systems before potential problems turn into real (and real big) ones.

The Vall-E research team addressed those "broader impacts" in the conclusion of its paper. "Since VALL-E could synthesize speech that maintains speaker identity, it may carry potential risks in misuse of the model, such as spoofing voice identification or impersonating a specific speaker," the team wrote. "To mitigate such risks, it is possible to build a detection model to discriminate whether an audio clip was synthesized by VALL-E. We will also put Microsoft AI Principles into practice when further developing the models."

In case you need further evidence that on-the-fly voice mimicry leads to bad places:

Andy has been gaming on PCs from the very beginning, starting as a youngster with text adventures and primitive action games on a cassette-based TRS80. From there he graduated to the glory days of Sierra Online adventures and Microprose sims, ran a local BBS, learned how to build PCs, and developed a longstanding love of RPGs, immersive sims, and shooters. He began writing videogame news in 2007 for The Escapist and somehow managed to avoid getting fired until 2014, when he joined the storied ranks of PC Gamer. He covers all aspects of the industry, from new game announcements and patch notes to legal disputes, Twitch beefs, esports, and Henry Cavill. Lots of Henry Cavill.
