Mesh shaders explained: What are they, what's the big fuss, and why are they only in Alan Wake 2?

Why it's taken so many years for mesh shaders to actually be used in a game.

When it was first announced that Alan Wake 2 needs a graphics card that supports mesh shaders to run, PC gamers with AMD RX 5000-series or Nvidia GTX 10-series GPUs were immediately disappointed. It turns out the game will actually run without them, but the performance is so poor that mesh shader support really is a must. So what the hell are they and why do they have such a big impact on frame rates?

TL;DR version to begin with. Mesh shaders let game developers process polygons with far more power and control than the old vertex shaders ever did. So much so that games of the near future could be throwing billions of triangles around the screen, and your GPU will just breeze through it all.

If you're wondering how on earth that could be possible, then carry on reading to find out!

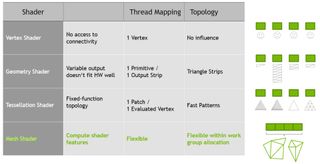

As most PC gamers will know, the world of a 3D game starts life as an enormous collection of triangles. The first main stage of rendering involves moving and scaling these triangles, before starting the long process of applying textures and colouring in pixels. In the graphics API Direct3D, the blocks of code used to do all of this are called vertex shaders (a vertex is a corner of a triangle).
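To make that a little more concrete, here's a toy Python sketch of the basic job a vertex shader does: take one vertex position and move, rotate, and scale it with a 4x4 matrix. Real vertex shaders are written in HLSL and run on the GPU, and they also apply a camera projection, which is left out here. The function names below are made up purely for illustration.

```python
import math


def make_transform(scale, angle_deg, offset):
    """4x4 matrix that scales, then rotates about the Z axis, then translates."""
    c = math.cos(math.radians(angle_deg))
    s = math.sin(math.radians(angle_deg))
    tx, ty, tz = offset
    return [
        [scale * c, -scale * s, 0.0,   tx],
        [scale * s,  scale * c, 0.0,   ty],
        [0.0,        0.0,       scale, tz],
        [0.0,        0.0,       0.0,   1.0],
    ]


def vertex_shader(position, matrix):
    """Toy stand-in for a vertex shader: transform one vertex by a 4x4 matrix."""
    v = (*position, 1.0)  # homogeneous coordinates
    out = [sum(matrix[row][k] * v[k] for k in range(4)) for row in range(4)]
    return tuple(out[:3])


# Runs once per vertex (corner), not once per triangle.
triangle = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
matrix = make_transform(scale=2.0, angle_deg=90.0, offset=(5.0, 0.0, 0.0))
moved = [vertex_shader(p, matrix) for p in triangle]
# roughly [(5, 0, 0), (5, 2, 0), (3, 0, 0)], give or take floating-point rounding
```

Note that the shader works on corners rather than whole triangles, which is why the stage is named after vertices.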

There are actually a few more shader types involved in this stage, such as hull, domain, and geometry shaders, though these are optional. But with them, developers can take the models and environments created by the game's designers and bring them to life on our monitors. In the majority of the games we play today, the vertex processing stage isn't particularly demanding.

This is because most games don't use huge numbers of triangles to create the world. Developers kept this aspect quite light, instead using vast numbers of compute and pixel shaders to make everything look good. For the most part, at least. Scenes that show close-up shots of characters' faces and bodies are different, and here the vertex load increases quite a bit.

Even then, it's still not the bottleneck in rendering performance. That's very much in the pixel processing stage, as it is in most games. However, one can achieve only so much with a relatively small number of triangles, and the ever-present push for better and more realistic graphics means only one thing: more vertices to crunch through.


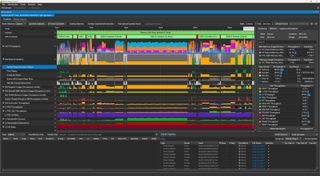

The image above shows a breakdown of all the GPU processing required to produce a single frame of graphics in Cyberpunk 2077, with every graphics setting enabled, including path tracing and upscaling.

The part to focus on is labelled Vertex/Tess/Geometry Warps and you can see (or perhaps not, as it's so small) that compared to everything else, the vertex workload is tiny.

So you'd be forgiven for thinking that the game could have way more triangles everywhere and still run just fine. However, it's not just about the number of triangles to process, but also how easy it is to manage them all.

This is where the traditional vertex processing stage in Direct3D hits a bit of a wall. By design, it's very rigid in what it lets a developer do, and it requires every single triangle to be processed before anything else can be done. This is especially limiting if you know that a large number of these objects just aren't going to be visible.

If you want to get rid of them (aka culling), you either have to do it on the CPU before issuing any commands to the GPU, or discard them after all the triangles have been vertex shaded. That's not very efficient, and if you want to do anything more complicated in the old Direct3D vertex pipeline (the fixed sequence of stages that every triangle passes through), you've really got your work cut out.
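Here's a rough Python sketch of the "discard after vertex shading" approach. Every vertex gets shaded first, and only then can back-facing triangles be thrown away. The winding-order convention (treating counter-clockwise as front-facing) and all the function names are illustrative assumptions, not how any particular driver or GPU actually does it.

```python
def signed_area_2d(a, b, c):
    """Twice the signed area of triangle abc in 2D screen space.
    Positive means the corners run counter-clockwise."""
    return (b[0] - a[0]) * (c[1] - a[1]) - (b[1] - a[1]) * (c[0] - a[0])


def traditional_pipeline(vertices, triangles, shade):
    """Shade every vertex, then cull back-facing triangles.

    The cull test needs transformed positions, so it can only run after the
    vertex shading work has already been done for all vertices.
    """
    shaded = [shade(v) for v in vertices]  # every vertex, visible or not
    kept = [
        t for t in triangles
        # Assumption for this sketch: counter-clockwise = front-facing.
        if signed_area_2d(shaded[t[0]], shaded[t[1]], shaded[t[2]]) > 0
    ]
    return len(shaded), kept


# Two triangles sharing an edge; the second is wound clockwise (back-facing).
verts = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]
tris = [(0, 1, 2), (1, 2, 3)]
shaded_count, visible = traditional_pipeline(verts, tris, shade=lambda v: v)
print(shaded_count, visible)  # 4 [(0, 1, 2)]
```

All four vertices get shaded even though only one of the two triangles survives, which is the wasted effort in a nutshell.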

This is partly why games don't have such a massive vertex load. Basically, vertex shaders just get in the way.

To solve this problem, Microsoft introduced mesh shaders in preview form back in 2019, before making them part of the public Direct3D release in 2020 (the whole shebang was called DirectX 12 Ultimate). Nvidia had already promoted mesh shaders before they were a part of Direct3D, showcasing the possibilities with its 2018 Turing GPU architecture and a sweet asteroids demo.

So you'd think more developers would have switched on to them. But it wasn't until 2021 that UL Solutions announced a mesh shader feature test for 3DMark, and the odd developer showcase popped up a year later. Beyond that, pretty much nothing happened until Alan Wake 2. But what exactly are mesh shaders?

The best way to understand mesh shaders is to think of them as compute shaders for triangles. They offer far greater flexibility in how large groups of triangles, called meshes, can be processed. For example, one can split up a big mesh into lots of smaller groups of triangles (which Microsoft calls meshlets) and then distribute their processing across many GPU threads.
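As a rough illustration of splitting a mesh into meshlets, here's a simple greedy approach in Python: walk the triangle list and start a new meshlet whenever the current one would exceed a vertex or triangle limit. The default limits of 64 vertices and 124 triangles are illustrative choices (Direct3D 12 caps a single mesh shader group's output at 256 vertices and 256 primitives), and production meshlet builders typically also reorder triangles for better locality, which this sketch doesn't attempt. The class and function names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Meshlet:
    vertices: list = field(default_factory=list)   # global vertex indices used
    triangles: list = field(default_factory=list)  # triangles as local indices


def build_meshlets(triangles, max_vertices=64, max_triangles=124):
    """Greedily pack an index list of triangles into size-limited meshlets."""
    meshlets = []
    current = Meshlet()
    local = {}  # global vertex index -> position in current.vertices
    for tri in triangles:
        unique = list(dict.fromkeys(tri))  # drop repeated corners
        new_verts = [v for v in unique if v not in local]
        full = (len(current.vertices) + len(new_verts) > max_vertices
                or len(current.triangles) + 1 > max_triangles)
        if full and current.triangles:
            # Close this meshlet; shared vertices get duplicated into the next one.
            meshlets.append(current)
            current, local = Meshlet(), {}
            new_verts = unique
        for v in new_verts:
            local[v] = len(current.vertices)
            current.vertices.append(v)
        current.triangles.append(tuple(local[v] for v in tri))
    if current.triangles:
        meshlets.append(current)
    return meshlets


# A short triangle strip, packed with deliberately tiny limits.
strip = [(0, 1, 2), (2, 1, 3), (2, 3, 4), (4, 3, 5)]
for m in build_meshlets(strip, max_vertices=4, max_triangles=2):
    print(m.vertices, m.triangles)
# [0, 1, 2, 3] [(0, 1, 2), (2, 1, 3)]
# [2, 3, 4, 5] [(0, 1, 2), (2, 1, 3)]
```

Each meshlet is small and self-contained, so on a GPU each one can be handed to its own group of threads. The price is that vertices on the boundary between two meshlets (2 and 3 above) are stored twice.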

Since mesh shaders were designed to be very similar to compute shaders, they tap into the fact that modern GPUs have been extremely good at massively parallel compute work for many years. Along with the accompanying amplification shader, game developers now have far more control over the processing of triangles, especially regarding how and when to cull them. All of that means the game can use even more detailed models, packed to the hilt with polygons.
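An amplification shader can run a quick visibility check on each meshlet before any of its triangles are processed. The Python sketch below assumes each meshlet has a precomputed bounding sphere and tests it against the planes of the view frustum; only meshlets that pass would have mesh shader work launched for them (in HLSL, an amplification shader does this by calling DispatchMesh). The box-shaped stand-in for a frustum and all the function names are illustrative assumptions, and real engines often layer on other tests, such as occlusion culling.

```python
import math


def bounding_sphere(points):
    """Cheap (not minimal) bounding sphere: centroid plus farthest-point distance."""
    n = len(points)
    center = tuple(sum(p[i] for p in points) / n for i in range(3))
    radius = max(math.dist(center, p) for p in points)
    return center, radius


def sphere_visible(center, radius, planes):
    """Each plane is (nx, ny, nz, d) with its normal pointing into the frustum,
    so a point p is inside when nx*px + ny*py + nz*pz + d >= 0."""
    for nx, ny, nz, d in planes:
        distance = nx * center[0] + ny * center[1] + nz * center[2] + d
        if distance < -radius:  # sphere lies entirely outside this plane
            return False
    return True


def amplification_pass(meshlet_bounds, planes):
    """Return the indices of meshlets that survive culling."""
    return [i for i, (c, r) in enumerate(meshlet_bounds)
            if sphere_visible(c, r, planes)]


# A simple box standing in for a view frustum: -1 <= x, y <= 1 and 0 <= z <= 10.
box = [(1, 0, 0, 1), (-1, 0, 0, 1), (0, 1, 0, 1), (0, -1, 0, 1),
       (0, 0, 1, 0), (0, 0, -1, 10)]
meshlets = [
    [(0.0, 0.0, 5.0), (0.5, 0.0, 5.0), (0.0, 0.5, 5.0)],     # inside
    [(20.0, 0.0, 5.0), (21.0, 0.0, 5.0), (20.0, 1.0, 5.0)],  # far off to the side
    [(0.8, 0.0, 5.0), (1.6, 0.0, 5.0), (1.2, 0.4, 5.0)],     # straddles x = 1
]
bounds = [bounding_sphere(m) for m in meshlets]
print(amplification_pass(bounds, box))  # [0, 2]
```

The key point is that one cheap sphere test can throw away a whole meshlet's worth of triangles before a single vertex in it is shaded, rather than shading everything and discarding afterwards.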

But if mesh shaders are so damn awesome, why has it taken so long for them to appear in an actual game? After all, the feature test in 3DMark shows the potential performance uplift is substantial. Well, there are a couple of reasons behind it all.

The first is that the hardware running the game needs to actively support mesh shaders. In the case of AMD GPUs, you need one that's RDNA 2-based or newer, and for Nvidia, you need a Turing, Ampere, or Ada Lovelace GPU. You might look at this and think that's plenty of GPUs with the feature, especially since Turing came out in 2018.

When ray tracing hardware came out, games using the technology followed almost immediately, so why didn't the same happen for mesh shaders? Well, we can't ignore the impact that consoles have in all of this, and the first one to have mesh shaders was the Xbox Series X/S. In theory, the PS5's chip can do them, but since Sony uses its own graphics API, developers can't just use one approach to vertex processing across all platforms.

Unreal Engine 5 uses mesh shaders as part of its Nanite feature, but only to complement that system, not to replace it. How many UE5 games are currently available that heavily use Nanite? I don't think you'll need more than one hand to list them all.

We're only starting to see developers utilise the full feature set of consoles and the latest engines now, almost three years after Microsoft officially launched DirectX 12 Ultimate, which tells you everything you need to know about how long it takes to really dig into a new technology and implement it well in a game.

Take DirectStorage, for example, which Microsoft released last year. This was another feature added to DirectX 12 Ultimate, and it's another system that removes performance bottlenecks caused by the way older loading methods worked. Without it, games have to copy every model and texture into system memory first, before transferring it across to the graphics card's VRAM.

DirectStorage lets the GPU grab data straight off the storage drive and handle the decompression of files, too. With the inclusion of this API, games that stream lots of assets can bypass the CPU and system RAM, removing a potential bottleneck in performance.

However, as things currently stand, only one PC game uses it, and it's Forspoken, which sadly wasn't the best of games to showcase it. More will come, of course, but it will take time to get the best from it, and retrofitting it to older games won't help, because they weren't designed to stream data on that scale, unlike games built for the latest consoles.

Similarly, there's no point in adding a mesh shader pipeline to an old game, as it isn't performance-limited by the vertex processing stage, whereas slapping in a spot of ray tracing produces an immediate wow factor (or meh factor, depending on your view of RT).

To really make the most of mesh shaders, developers need to create a whole set of polygon-rich models and worlds, and then ensure there's a large enough user base of supporting hardware out there to guarantee that sales of the game will justify the time spent going down the mesh shader route.

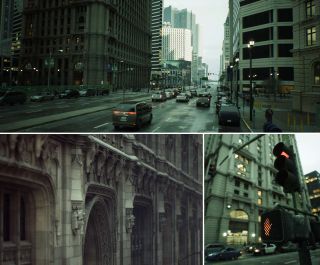

This is precisely what Remedy did with Alan Wake 2. If you have the right supporting hardware, the game looks fabulous and runs really well; you don't even need an absolute beast of a graphics card (though it does help) to enjoy the visuals.

So while it's taken a good few years for mesh shaders to make a proper appearance, there's no doubt that developers will take a leaf from Remedy's book and take full advantage of them in forthcoming releases. 2023 might be the year that ray tracing really started to show its potential, but 2024 could well be the year of the mesh shader.

Nick, gaming, and computers all first met in 1981, with the love affair starting on a Sinclair ZX81 in kit form and a book on ZX Basic. He ended up becoming a physics and IT teacher, but by the late 1990s decided it was time to cut his teeth writing for a long-defunct UK tech site. He went on to do the same at MadOnion, helping to write the help files for 3DMark and PCMark. After a short stint working at Beyond3D.com, Nick joined Futuremark (MadOnion rebranded) full-time, as editor-in-chief for its gaming and hardware section, YouGamers. After the site shut down, he became an engineering and computing lecturer for many years, but missed writing. Cue four years at TechSpot.com and over 100 long articles on anything and everything. He freely admits to being far too obsessed with GPUs and open world grindy RPGs, but who isn't these days?
