Intel is fighting a battle between performance and security

Why it took more than 10 years for many of these flaws to come to light.

The past two years have been rough on Intel CPUs. And no, I'm not talking about AMD's sudden increase in competitiveness with its Ryzen CPUs, or the repeated delays with Intel's 10nm process. I'm talking about security flaws. In early 2018, Intel and security researchers revealed two exploits, dubbed Meltdown and Spectre, that affect nearly all modern Intel CPUs. And as we warned at the time, those were just the proverbial tip of the iceberg. Since then, numerous other exploits have been discovered, the latest being the MDS (Microarchitectural Data Sampling) attacks, including RIDL and Fallout, which again affect Intel CPUs going back as far as the first-generation Core i7 parts.

The good news is that patches and mitigations have largely addressed the problems. The bad news is that they cost performance, sometimes a little and sometimes a lot. That didn't stop the lawyers, naturally: at least 32 class-action lawsuits were filed against Intel in early 2018, and I'm sure that number has grown since. There's more bad news: we're going to see more similar exploits in the coming years. At this point, it feels inevitable.

What's the deal with all these new CPU security vulnerabilities? Where do these exploits come from, and how could these sometimes severe vulnerabilities go undiscovered for so long? Not surprisingly, it's a pretty complex topic. Nearly all of these exploits are classified as side-channel attacks: rather than reading protected data directly, they infer it from indirect effects, such as how long a memory access takes. The root cause goes back to many of the fundamentals of modern CPU design, so let's run through some techno-babble for a moment, if you'll indulge me.

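L1/L2/L3 caches: Caches are small, fast pools of memory that keep recently used data close to the CPU cores, so your CPU doesn't end up waiting on slow DRAM (which in turn is basically a cache for even slower permanent storage, your HDD and/or SSD). Meltdown uses the cache as a side channel: by timing how quickly memory loads complete, an attack can extract 'secrets' (protected data in main memory, such as kernel memory) that a program was never allowed to read.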
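To make the cache side channel concrete, here is a toy Python model of Flush+Reload, the cache-timing technique the Meltdown researchers used to read out leaked data. It is a simulation under stated assumptions, not an exploit: the `ToyCache` class, the `victim_touch` and `recover_secret` helpers, and the hit/miss cycle counts are all hypothetical, and nothing here measures real hardware. The attacker flushes a probe array, lets the victim's access pull one probe slot into the cache, then times every slot; the one fast slot reveals the secret value.

```python
# Toy model of a Flush+Reload cache-timing side channel.
# Nothing here touches real hardware: "latency" is simulated, and the
# hit/miss costs below are hypothetical round numbers.

HIT_CYCLES = 40     # hypothetical cost of a cache hit
MISS_CYCLES = 300   # hypothetical cost of going out to DRAM
STRIDE = 4096       # one probe slot per 4 KiB page, mirroring published attacks


class ToyCache:
    """Tracks which addresses are currently cached (no size limit)."""

    def __init__(self):
        self.lines = set()

    def flush(self, addr):
        # Analogous to x86 CLFLUSH: evict the line holding addr.
        self.lines.discard(addr)

    def access(self, addr):
        # Return the simulated latency, then leave the line cached.
        latency = HIT_CYCLES if addr in self.lines else MISS_CYCLES
        self.lines.add(addr)
        return latency


def victim_touch(cache, secret_byte):
    # Stand-in for the transient access: the victim's speculative work
    # caches the one probe slot whose index equals the secret byte.
    cache.access(secret_byte * STRIDE)


def recover_secret(cache, run_victim):
    # 1. Flush: evict every probe slot (one per possible byte value).
    for value in range(256):
        cache.flush(value * STRIDE)
    # 2. Let the victim run.
    run_victim(cache)
    # 3. Reload: time each slot; the single fast one reveals the secret.
    timings = [cache.access(value * STRIDE) for value in range(256)]
    return min(range(256), key=lambda value: timings[value])


print(recover_secret(ToyCache(), lambda c: victim_touch(c, 42)))  # 42
```

The key point is that the victim never hands over the secret; the attacker only learns which memory line became fast to load.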

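Speculative execution: Modern CPUs can fetch, decode, and execute up to six instructions per clock cycle on each core. Every time the code hits a branch, however, the CPU has to guess which way it will go, and therefore where the next instructions will come from. It 'speculates' and starts executing the predicted instructions so the hardware isn't just twiddling its thumbs; if the guess turns out to be wrong, the results are thrown away. Spectre abuses branch prediction and speculative execution: it tricks the CPU into speculatively running code that touches data it's not supposed to access, and although those results are discarded, traces remain in the cache where a side channel can recover them.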
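For a feel of how a CPU 'guesses' branch directions, here is a minimal Python sketch of a 2-bit saturating-counter predictor, a classic textbook scheme. Real Intel and AMD predictors are far more sophisticated and largely undocumented, so treat this as an illustration of the idea rather than of any shipping CPU. The function name `simulate_predictor` and the loop pattern are hypothetical.

```python
# A 2-bit saturating-counter branch predictor (textbook scheme, not a
# model of any real CPU's predictor).

def simulate_predictor(outcomes):
    """Predict each branch outcome (True = taken); return the number of hits."""
    counter = 2  # states 0-1 predict "not taken", states 2-3 predict "taken"
    correct = 0
    for taken in outcomes:
        prediction = counter >= 2
        if prediction == taken:
            correct += 1
        # Nudge the counter toward the actual outcome, saturating at 0 and 3.
        if taken:
            counter = min(counter + 1, 3)
        else:
            counter = max(counter - 1, 0)
    return correct


# A loop branch: taken 9 times, then falls through once, repeated 10 times.
loop_pattern = ([True] * 9 + [False]) * 10
print(simulate_predictor(loop_pattern), "of", len(loop_pattern))  # 90 of 100
```

The predictor misses only the last iteration of each loop, which is why speculation pays off. Spectre exploits the same learned behavior: by training a branch with harmless inputs, an attacker can make the CPU confidently guess wrong when it matters.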

Pipelines and buffers: Imagine a factory assembly line, where each stage does one portion of the work. For a toy, one stage might put on the arms, another the legs, then the head, then paint, then stickers. Whatever. CPUs use pipelining in a similar way, breaking the work for each machine instruction (e.g., adding two numbers) into multiple stages, and roughly 15 to 20 stages is common these days. To keep all of these stages fed, CPUs use lots of internal buffers to hold data in flight, so that everything can run as fast as possible. RIDL, Fallout, and other MDS-class attacks leak data from these buffers: RIDL targets the line fill buffers and load ports, while Fallout targets the store buffer.
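The payoff from pipelining is easy to put into numbers. Assuming an idealized pipeline where every stage takes one clock cycle and nothing ever stalls (real CPUs are messier), a k-stage pipeline finishes n instructions in k + n - 1 cycles instead of n × k. This Python sketch, with hypothetical function names, works through 1,000 instructions on a 20-stage pipeline.

```python
def cycles_unpipelined(n_instructions, stages):
    # Each instruction goes through every stage before the next one starts.
    return n_instructions * stages


def cycles_pipelined(n_instructions, stages):
    # The first instruction needs `stages` cycles to fill the pipeline; after
    # that, one instruction completes every cycle (ideal case: no stalls).
    return stages + n_instructions - 1


n, stages = 1000, 20
print(cycles_unpipelined(n, stages))  # 20000
print(cycles_pipelined(n, stages))    # 1019
```

The same idealization shows why branch prediction matters so much: a misprediction throws away the partially completed work in the pipeline, and the deeper the pipeline, the more work is lost.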

Hyper-Threading: Hyper-Threading is Intel's brand name for SMT (Simultaneous Multi-Threading), which lets two separate instruction threads run concurrently on the same CPU core, sharing many of its resources. The goal is to make better use of the execution resources in superscalar designs. Above I said that a modern CPU can fetch/decode/execute up to six instructions per clock on each core, but it only does so if it can fill all of the available slots. Finding instructions that can be executed independently of one another (instruction-level parallelism) is difficult, especially when a CPU needs to find six of them every cycle.


SMT gets around this by exploiting thread-level parallelism: instructions from two different threads are inherently independent of one another. Now instead of trying to fill six slots from one thread (hard to do), a CPU can fill three slots from one thread and the other three from a second thread (easier to do). The catch is that both threads share the same core and its buffers, which is why Fallout and other MDS attacks can be easier to implement when Hyper-Threading is active.
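A toy Python model shows why a second thread helps fill the issue slots. It assumes a six-wide core and hypothetical per-cycle counts of independent, ready-to-issue instructions for each thread; real SMT hardware arbitrates between threads in more elaborate ways than this greedy fill, and `slots_used` is a hypothetical helper.

```python
ISSUE_WIDTH = 6  # issue slots per core per cycle, matching "up to six" above


def slots_used(ready_per_thread):
    """Fill one cycle's issue slots greedily from each thread's ready instructions."""
    used = 0
    for ready in ready_per_thread:
        used += min(ready, ISSUE_WIDTH - used)
    return used


# Hypothetical counts of independent, ready-to-issue instructions per cycle.
thread_a = [3, 2, 4, 1]
thread_b = [2, 3, 3, 2]

single = sum(slots_used([a]) for a in thread_a)
smt = sum(slots_used([a, b]) for a, b in zip(thread_a, thread_b))
total = ISSUE_WIDTH * len(thread_a)
print(f"one thread: {single}/{total} slots, two threads: {smt}/{total} slots")
# one thread: 10/24 slots, two threads: 19/24 slots
```

With these made-up numbers, a single thread leaves more than half the slots idle, while two threads keep the core far busier.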

What does all of this mean?

Even if you don't follow what the above features mean on a technical level, the purpose of each one is to make our CPUs faster. If we got rid of caches, branch prediction, superscalar architectures, Hyper-Threading, and all sorts of other techno-babble features, it would be far easier to build a 'secure' CPU. Unfortunately, that CPU would also have a fraction of the performance of a modern AMD Ryzen or Intel Core processor. Intel is fighting a battle between performance and security, and we're caught in the crossfire.


For decades, CPU designs have focused on making our processors faster and more efficient. All that speed is great for getting work done, but now we're discovering a dark secret: those speed hacks can also open up vulnerabilities. Making some of these side-channel attacks work is highly complex, but it only takes one working approach to pave the way for many clones. Now that we've seen several proof-of-concept exploits, there's a ripple effect, with new and different approaches also bearing fruit. It's forcing CPU architects to go back and re-examine every part of their designs for critical flaws and exploits. Needless to say, that's a difficult task.

Consider Intel's SGX (Software Guard Extensions), which is supposed to seal off and protect data in an encrypted enclave. Good in theory, but another exploit (Foreshadow) shows that SGX isn't quite working as intended. Because Foreshadow can extract a machine's secret SGX attestation keys, compromising a single SGX machine can undermine trust in the whole SGX ecosystem (all the other PCs and servers in an enterprise network). One step forward, one step back.

Intel, AMD, ARM, IBM, and any other companies making modern CPUs (and GPUs as well, potentially) now have the added need to build in stronger security features. That means more resources will be spent on securing processors, rather than making them faster and/or more efficient. It's a balancing act that has always existed, but now the scales are tipping more toward security than performance.

What can be done to prevent these exploits?

We're not dead in the water, thankfully. Even with these exploits now public, actually implementing an attack takes time as well as access: the attack code needs to run on your local PC hardware to work at all. That sounds like a high bar, but JavaScript running on a website can in some cases pull off some of these exploits. Even short of disabling JavaScript, work is being done; browser makers, for example, have reduced the precision of the timers these attacks depend on.

One change is to the way our operating systems function, which has already happened in various ways. Meltdown was largely mitigated at the OS level by KPTI (Kernel Page-Table Isolation), which grew out of the KAISER (Kernel Address Isolation to have Side-channels Efficiently Removed) research. KPTI keeps most kernel memory out of the page tables that user programs run with, but that isolation has a cost: every switch between a program and the kernel (a system call, for example) now has to swap page tables. Depending on the workload, the KPTI fixes could reduce performance by anywhere from 5 to 30 percent. That latter number is alarming, but thankfully it doesn't usually apply to home users; it's mostly an issue on servers, where I/O-heavy workloads make far more system calls. Software and firmware mitigations for other exploits have also reduced performance in some cases, again depending on the workload.
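Why would servers suffer more than home PCs? A back-of-the-envelope model helps: if KPTI adds a roughly fixed cost to every trip into and out of the kernel, the total overhead scales with how many system calls a workload makes. This Python sketch assumes a hypothetical 200 ns penalty per system call and two hypothetical workloads; these are illustrative numbers, not measurements, and real costs vary with the CPU (for instance, whether it supports PCID) and the kernel version.

```python
# Back-of-the-envelope KPTI cost model. All numbers are hypothetical.
EXTRA_NS_PER_SYSCALL = 200  # assumed extra cost per kernel entry/exit pair


def kpti_overhead(syscalls_per_sec, extra_ns=EXTRA_NS_PER_SYSCALL):
    """Fraction of one core's time spent on the added page-table switching."""
    return syscalls_per_sec * extra_ns / 1e9


# Hypothetical system-call rates for two kinds of workload.
workloads = {"desktop app": 20_000, "busy server": 1_000_000}
for name, rate in workloads.items():
    print(f"{name}: {kpti_overhead(rate):.1%} of a core")
# desktop app: 0.4% of a core
# busy server: 20.0% of a core
```

The model ignores a lot (TLB refills, caching effects, how the kernel batches work), but it captures why the same patch can be barely noticeable on one machine and painful on another.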

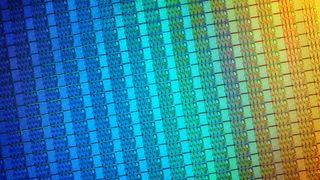

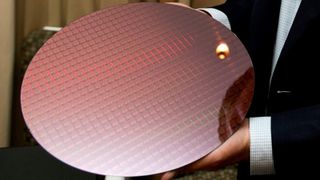

A more permanent solution is to build features into CPUs that prevent these attacks at the hardware level without sacrificing performance. Nice in theory, but that's easier said than done. Just look at the acronym MDS: Microarchitectural Data Sampling. Every part of a CPU's microarchitecture, the billions of transistors that go into a modern processor, is a potential source of data leakage. Figuring out how to close all the loopholes permanently takes time and resources, and may not even be practical. In the interim, all existing CPUs, including not-yet-released CPUs whose main architectural details have already been finalized, will remain vulnerable.

For future CPUs, Intel will rework architectural elements to better prevent attacks. I'm sure smart engineers (much smarter than me) are already figuring out how to do this with as little impact on performance as possible. But the final solution will require at the very least a new CPU, and in a worst-case scenario possibly new motherboards, GPUs, RAM, and software, at least if you want to be fully safe. And yet here I sit writing on a 5-year-old laptop, mostly unconcerned about these exploits, in part because I'm not running random apps from the Internet, nor am I visiting sketchy websites. But it's still a risk.

Ultimately, as I've said before, it isn't just Intel CPUs that are vulnerable. These flaws existed for more than a decade (sometimes much longer) without any public knowledge of them. That also means CPU and chip architects in general had no reason to design defenses against attacks nobody knew existed. Now that these side-channel attacks are a known problem, changes can and will be made, but don't be surprised if more vulnerabilities, including ones affecting non-Intel processors, surface in the coming years.

Jarred's love of computers dates back to the dark ages when his dad brought home a DOS 2.3 PC and he left his C-64 behind. He eventually built his first custom PC in 1990 with a 286 12MHz, only to discover it was already woefully outdated when Wing Commander was released a few months later. He holds a BS in Computer Science from Brigham Young University and has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.
