I'm finding it hard to get too excited about AMD's upcoming tech when it's all marketing and no meat

The Financial Analyst Day 2022 had AMD execs talking a lot, but actually saying very little.

I guess I shouldn't be surprised not to get any really juicy details about AMD's imminent CPU and GPU generations from Financial Analyst Day (FAD) 2022. After all, it's more about marketing where AMD is right now and where it's going in the next couple of years, so analysts can wax lyrical about why investors should throw money at Dr. Lisa T Su et al.

A FAD, then, is not so much about what the next generation of Ryzen CPU and Radeon GPU hardware is going to do, or how AMD plans to implement different technological wizardry in order to do it.

All we really got out of the different presentations—from such AMD luminaries as Mark 'The Papermaster' Papermaster, Rick Bergman, and David Wang—was a selection of marketing buzzwords and a few extraneous performance-per-watt percentage gains.

Which makes it hard to get too excited about what's coming up from the red team. Though given that the jaws of over-hyped expectation have bitten AMD in the collective posterior previously, maybe that's not such a bad thing.

There was, however, confirmation that we are looking at an ~8% IPC uplift for the new Zen 4 desktop processors, which we'd kinda figured out already from the combination of IPC and frequency making up the 15% gen-over-gen gains AMD touted at its Computex keynote last month.
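As a rough illustration of how those two numbers fit together, here's a minimal Python sketch. It assumes single-thread performance is simply IPC multiplied by clock speed, which is a simplification (memory latency, boost behaviour, and the benchmark itself all muddy things), and `implied_clock_uplift` is a hypothetical helper for this back-of-an-envelope sum, not anything AMD has published.

```python
# Assumption: single-thread performance ~= IPC x clock frequency.
# This is a rule of thumb, not AMD's methodology.


def implied_clock_uplift(total_uplift: float, ipc_uplift: float) -> float:
    """Return the fractional clock-speed gain needed to reach total_uplift.

    Both arguments are fractions, e.g. 0.15 for a 15% gain. Because the
    gains multiply rather than add, the clock share is a ratio, not a
    simple subtraction.
    """
    return (1.0 + total_uplift) / (1.0 + ipc_uplift) - 1.0


# Figures from the article: ~8% IPC, 15% overall single-thread uplift.
clock_gain = implied_clock_uplift(total_uplift=0.15, ipc_uplift=0.08)
print(f"implied clock-speed gain: {clock_gain:.1%}")
```

On those assumptions, an ~8% IPC gain only needs a clock bump of around 6.5% to land on 15% overall, which is the sort of gap higher frequencies could plausibly cover.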

We've been spoiled by previous generational performance uplifts from AMD's processor design teams, to the point where a combined single-thread gain of 35% in Cinebench R23 has been largely ignored because the actual Zen 4 architectural gains are expected to be less than 10%. Maybe the low-hanging fruit has already been picked from the Zen tree.

It's worth remembering that, when things were stagnating over at Intel before it got its node game back on track, all you could expect of what were then the best new CPU generations was a 10% bump. So really, any real-world increase over and above that figure, whether that's because AMD's new chips can happily chow down on more power, or because they run at higher frequencies… well, that's just dandy.

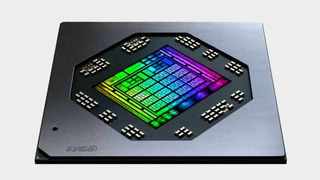

I guess I was foolishly hoping we'd get something more officially concrete from AMD about its next-gen GPUs than some vague noises about chiplet packaging and confirmation of DisplayPort 2.0 support. I want to be excited about AMD's new cards, and not just have to divine the trickles of truth from within the torrents of rumours, leaks, and speculation consistently cluttering up Twitter.

Because the GPU rumours sound potentially very exciting, but I now want the truth, damn it. Yeah, I'm impatient...

It's the chiplet thing which is the most intriguing, and something we've been speaking about for years, but it's a shame there's no meat on the marketing bones of these presentations. Is AMD actually using a chiplet design in the same way as it has with Ryzen, and by that I mean is it going to have multiple actual GPU chiplets to really pump up the shader counts?

All David Wang says about the 'advanced chiplet packaging' of the upcoming RDNA 3 GPU design is: "It allows us to continue to scale performance aggressively without the yield and the cost concerns of a large monolithic silicon.

"It allows us to deliver the best performance at the right cost."

From a financial perspective that's what you want to hear, and is one of the reasons the chiplet-based Ryzen CPUs were so successful from both a technical and manufacturing point of view.
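Why does splitting a GPU into smaller dies help with yield and cost? A common textbook approximation is the Poisson yield model, where the fraction of defect-free dies falls off exponentially with die area. The Python sketch below uses it to compare a hypothetical 600 mm² monolithic GPU against the same area split into one 360 mm² graphics die and six 40 mm² cache dies. The defect density, the die sizes, and the helper functions are all made-up assumptions for illustration, not AMD's numbers, and the model ignores packaging costs and the fact that partially defective dies can often be salvaged as cut-down SKUs.

```python
import math

# Hypothetical defect density: 0.1 defects per cm^2, i.e. 0.001 per mm^2.
# Real foundry figures vary by node and maturity; this is only illustrative.
DEFECTS_PER_MM2 = 0.001


def poisson_yield(area_mm2: float, defects_per_mm2: float = DEFECTS_PER_MM2) -> float:
    """Expected fraction of defect-free dies under a simple Poisson yield model."""
    return math.exp(-defects_per_mm2 * area_mm2)


def silicon_per_good_unit(die_areas_mm2: list[float]) -> float:
    """Wafer area (mm^2) spent per working product.

    Assumes every die is tested before packaging (known-good die), so a
    defective chiplet is thrown away on its own instead of taking the whole
    product with it. Ignores packaging cost, wafer-edge losses, and salvage.
    """
    return sum(area / poisson_yield(area) for area in die_areas_mm2)


# Hypothetical 600 mm^2 monolithic GPU vs. the same total area split into
# one 360 mm^2 graphics die plus six 40 mm^2 cache dies.
monolithic = silicon_per_good_unit([600.0])
chiplet = silicon_per_good_unit([360.0] + [40.0] * 6)

print(f"monolithic: {monolithic:.0f} mm^2 of wafer per good GPU")
print(f"chiplet:    {chiplet:.0f} mm^2 of wafer per good GPU")
```

With those made-up figures, the chiplet version needs roughly 30% less wafer area per working GPU, because a defect only writes off one small die rather than the whole thing. Real-world savings depend heavily on actual defect densities and on what the advanced packaging itself costs.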

But I spoke to Wang a few years ago about a potential shift to MCM designs, and he laid out the difficulties inherent in using multiple GPU chiplets for gaming and graphics workloads.

"To some extent you’re talking about doing CrossFire on a single package. The challenge is that unless we make it invisible to the ISVs [independent software vendors] you’re going to see the same sort of reluctance.

"We’re going down that path on the CPU side," Wang continues, "and I think on the GPU we’re always looking at new ideas. But the GPU has unique constraints with this type of NUMA [non-uniform memory access] architecture, and how you combine features… The multithreaded CPU is a bit easier to scale the workload. The NUMA is part of the OS support so it’s much easier to handle this multi-die thing relative to the graphics type of workload."

You can see that play out in the confirmation of 3D chiplet packaging for the CDNA 3 GPUs aimed at the Radeon Instinct crew: we're likely to see multiple compute chiplets on that side of things. But on the RDNA 3 side, I'm not convinced multi-GPU is ready for prime-time gaming in chiplet form.

So, what could this chiplet setup be? The latest rumours suggest that even the top two Navi 3x GPUs, which are expected to be chiplet-based, will come with only a single graphics compute die (GCD), with the chiplet packaging noises actually referring to the GPU's cache being split off onto separate multi cache dies (MCDs).

That would keep things simple from a straight gaming performance point of view, and would mean you can devote most of a GCD's die area to the core graphics hardware, shaving off the space you'd normally give over to cache memory. And if the IO and memory bus interconnects are shifted off the GCD, that frees up a lot of die space, too.

But again we're resorting to rumours to get engaged and excited about the possibilities. I'm just impatient; I'm sure it won't be that long until AMD actually gives us something chonky, architecture-wise, to get our teeth into. Maybe.

Though with the RDNA 3 release window expected to open sometime in October, and that only being a mid-range kick-off, and a monolithic one at that, let's just say I'm not holding my breath.

Dave has been gaming since the days of Zaxxon and Lady Bug on the ColecoVision, and code books for the Commodore VIC-20 (Death Race 2000!). He built his first gaming PC at the tender age of 16, and finally finished bug-fixing the Cyrix-based system around a year later. When he dropped it out of the window. He first started writing for Official PlayStation Magazine and Xbox World many decades ago, then moved onto PC Format full-time, then PC Gamer, TechRadar, and T3 among others. Now he's back, writing about the nightmarish graphics card market, CPUs with more cores than sense, gaming laptops hotter than the sun, and SSDs more capacious than a Cybertruck.

Most Popular