How Emulators Work

Take your rig to strange new worlds

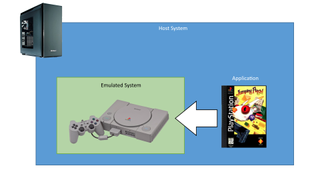

Emulators are a class of software that allows one computer system, the host, to simulate a different computer system in order to run applications meant for that foreign system. If you've ever downloaded a console emulator, there's a good chance you've already messed around with one.

In this article we’ll take a look at various kinds of emulators and how they work.

Do you want it low or high?

Emulators come in two flavors: low-level and high-level. The difference lies primarily in what gets emulated: the hardware itself, or the functions that the hardware provides.

Low-level emulation

Low-level emulation (LLE) simulates the behavior of the hardware being emulated. The host computer creates an environment in which the application runs and is processed as closely as possible to the way the emulated hardware would process it. For the most accurate emulation, not only are all the components simulated, but so are the signals passing between them. The more complex the system, whether because it has more chips or because its chips are themselves complicated, the more difficult LLE becomes.
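The following minimal Python sketch makes the idea of "components and their signals" concrete. It rests on deliberately simple assumptions. There are two made-up chips:

- a timer that counts down once per clock and raises an interrupt line when it reaches zero;
- a CPU stub that samples that line on every cycle.

The classes `Timer` and `Cpu`, the `run` helper, and the four-cycle reload value are all hypothetical. A real LLE core tracks far more state, but the shape is similar: every component is stepped clock by clock, and the components communicate through simulated signals rather than direct function calls.

```python
class Timer:
    """Hypothetical timer chip: counts down once per clock tick and
    asserts an interrupt (IRQ) signal when the counter reaches zero."""

    def __init__(self, reload: int) -> None:
        self.reload = reload
        self.counter = reload
        self.irq_line = False  # simulated wire from the timer to the CPU

    def clock(self) -> None:
        self.counter -= 1
        if self.counter == 0:
            self.irq_line = True        # assert the interrupt signal
            self.counter = self.reload  # reload and keep counting


class Cpu:
    """Hypothetical CPU stub that samples the IRQ line on every cycle."""

    def __init__(self, timer: Timer) -> None:
        self.timer = timer
        self.interrupts_taken = 0

    def clock(self) -> None:
        if self.timer.irq_line:
            self.interrupts_taken += 1
            self.timer.irq_line = False  # acknowledge by clearing the signal


def run(cycles: int) -> int:
    """Advance every component one clock at a time and count serviced IRQs."""
    timer = Timer(reload=4)
    cpu = Cpu(timer)
    for _ in range(cycles):
        timer.clock()  # each component steps once per clock...
        cpu.clock()    # ...and sees the other's signals as of this cycle
    return cpu.interrupts_taken


print(run(12))  # 3: the timer fires on cycles 4, 8 and 12
```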

LLE can be achieved in hardware or in software. In hardware emulation, the original hardware, or something that can substitute for it, is built into the host system itself. The first two models of the PlayStation 3 did hardware emulation this way, containing the actual hardware used in the PlayStation 2. Older Macintosh computers could be fitted with an add-on card, the MS-DOS Compatibility Card, that contained a 486-based system to run x86 applications.

Software low-level emulation is just what it sounds like: it simulates the hardware in software. Many retro video game consoles and 8-bit home computers are emulated this way because they were built from well-understood components (it’s hard to find a popular system of that era that didn’t use the venerable MOS 6502 or Zilog Z80). One aspect that can make or break an emulator is how often it syncs up the emulated components. For example, the SNES emulator Higan aims for high accuracy by increasing the number of times the components sync up with each other. This makes games that rely on timing hacks or other timing quirks playable. The cost, however, is that Higan requires a very fast processor relative to the hardware it’s trying to emulate.
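A toy scheduler can illustrate the sync-frequency trade-off. This Python sketch is not how Higan is actually structured. It assumes a hypothetical video chip that advances one scanline every 10 cycles, and a CPU that lets the video chip catch up only every `sync_interval` cycles. The names `VideoChip` and `emulate` are made up for illustration.

```python
class VideoChip:
    """Hypothetical video chip that advances one scanline every 10 cycles."""

    CYCLES_PER_LINE = 10

    def __init__(self) -> None:
        self.cycles = 0

    @property
    def scanline(self) -> int:
        return self.cycles // self.CYCLES_PER_LINE

    def run(self, cycles: int) -> None:
        self.cycles += cycles


def emulate(total_cycles: int, sync_interval: int, read_at: int) -> tuple:
    """Run the CPU one cycle at a time, letting the video chip catch up only
    every `sync_interval` cycles. Returns (scanline the CPU sees when it
    reads the video status at cycle `read_at`, number of syncs performed)."""
    video = VideoChip()
    pending = 0   # CPU cycles the video chip has not caught up on yet
    syncs = 0
    observed = -1
    for cycle in range(1, total_cycles + 1):
        pending += 1
        if pending == sync_interval:
            video.run(pending)  # bring the video chip up to the CPU's time
            pending = 0
            syncs += 1
        if cycle == read_at:
            observed = video.scanline  # the game polls the status register
    return observed, syncs


print(emulate(100, sync_interval=1, read_at=69))   # (6, 100)
print(emulate(100, sync_interval=50, read_at=69))  # (5, 2)
```

Syncing every cycle reports the true scanline (6) but costs 100 synchronizations. Syncing every 50 cycles needs only 2, but it reports a stale scanline (5). That is exactly the kind of discrepancy that trips up games relying on precise timing.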

High-level emulation

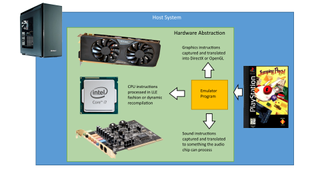

High-level emulation (HLE) takes a different approach to simulating a system. Instead of trying to simulate the hardware, it simulates the functions of the hardware. In the mid-'90s, hardware abstraction was spreading to more computer systems, including video game consoles. This made programming easier, since developers no longer had to keep reinventing the wheel.


Hardware abstraction is a way of hiding the intricate details of controlling hardware. Instead, it provides a set of commonly used actions and handles all the little details automatically. Storage drive interfaces are an example. Originally, if a developer wanted to read data from a drive, they had to command the drive to spin up, position the read/write head, get the timing right to read the data, pull the data, and then transfer it over. With hardware abstraction, the developer simply commands “I want to read at this place” and the firmware on the drive takes care of the rest. An HLE takes advantage of hardware abstraction by figuring out what the commands are intended to do in the emulated environment.
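A high-level emulator can exploit this kind of abstraction directly. The Python sketch below assumes a hypothetical drive whose guest-facing interface is just "read `count` sectors starting at block `lba`". The emulator services that request from a disk image on the host. It never simulates the motor, the head, or the timing. `HleDrive`, `read_sectors`, and the 512-byte sector size are assumptions for illustration.

```python
import io
from typing import BinaryIO

SECTOR_SIZE = 512  # assumed sector size for this hypothetical drive


class HleDrive:
    """Hypothetical high-level drive: answers the guest's abstract
    'read these sectors' request straight from a disk image on the host,
    without simulating spin-up, head positioning, or read timing."""

    def __init__(self, image: BinaryIO) -> None:
        self.image = image

    def read_sectors(self, lba: int, count: int) -> bytes:
        """Service 'read `count` sectors starting at logical block `lba`'."""
        self.image.seek(lba * SECTOR_SIZE)
        data = self.image.read(count * SECTOR_SIZE)
        if len(data) != count * SECTOR_SIZE:
            raise ValueError("read past the end of the disk image")
        return data


# A 4-sector in-memory "disk image" filled with a repeating byte pattern.
drive = HleDrive(io.BytesIO(bytes(range(256)) * 8))
sector = drive.read_sectors(lba=1, count=1)
print(len(sector), sector[:4])  # 512 b'\x00\x01\x02\x03'
```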

HLE has three primary methods of simulating the functions of the hardware:

- Interpreting: The emulator executes the application’s code one instruction at a time, mimicking what each instruction is supposed to do.

- Dynamic recompiling: The emulator translates chunks of the application’s processor instructions into equivalent instructions for the host computer’s processor, optimizing them where it can, and keeps the translated code for reuse. This avoids the overhead of decoding and dispatching every instruction one by one each time it runs, as an interpreter must.

- Command list interception: Co-processors with enough hardware abstraction, like the GPU and audio chip, are driven by command lists sent from the main processor. These lists are a series of instructions that tell the co-processor what to do. The emulator can intercept a command list and turn it into something the host computer can process on a similar co-processor. For example, command lists going to the emulated system’s GPU can be intercepted and turned into DirectX or OpenGL commands for the host’s video card to process.
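Command list interception can be sketched in Python as a loop that walks the guest's command list and replays each command on the host. Everything here is hypothetical. The opcode values and word layout do not belong to any real console. `HostRenderer` merely records calls at the point where a real emulator would issue DirectX or OpenGL commands.

```python
from dataclasses import dataclass, field

# Hypothetical guest GPU opcodes (not any real console's command format).
OP_SET_COLOR = 0x01      # followed by 3 words: r, g, b
OP_DRAW_TRIANGLE = 0x02  # followed by 6 words: x0, y0, x1, y1, x2, y2
OP_END = 0xFF


@dataclass
class HostRenderer:
    """Stand-in for a wrapper around the host's graphics API. It only records
    the calls it receives; a real emulator would issue DirectX or OpenGL work."""

    calls: list = field(default_factory=list)

    def set_color(self, r: int, g: int, b: int) -> None:
        self.calls.append(("set_color", r, g, b))

    def draw_triangle(self, vertices: list) -> None:
        self.calls.append(("draw_triangle", vertices))


def intercept_command_list(words: list, host: HostRenderer) -> HostRenderer:
    """Walk a guest command list (a flat list of ints) and replay it on the host."""
    i = 0
    while i < len(words):
        op = words[i]
        if op == OP_SET_COLOR:
            host.set_color(*words[i + 1:i + 4])
            i += 4
        elif op == OP_DRAW_TRIANGLE:
            coords = words[i + 1:i + 7]
            # Pair up (x, y) coordinates into three vertices.
            host.draw_triangle(list(zip(coords[0::2], coords[1::2])))
            i += 7
        elif op == OP_END:
            break
        else:
            raise ValueError(f"unknown guest GPU opcode {op:#x} at word {i}")
    return host


guest_list = [OP_SET_COLOR, 255, 0, 0,
              OP_DRAW_TRIANGLE, 0, 0, 10, 0, 0, 10,
              OP_END]
host = intercept_command_list(guest_list, HostRenderer())
print(host.calls)
# [('set_color', 255, 0, 0), ('draw_triangle', [(0, 0), (10, 0), (0, 10)])]
```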

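An example of an HLE is the Java Virtual Machine (JVM). Java code is not compiled ahead of time into the host machine’s native instructions. Instead, it is compiled to bytecode for a theoretical Java machine. The host then runs a virtual machine that executes that bytecode, either interpreting it or translating it into native instructions as the program runs. Applications made for Microsoft’s .NET framework run in a similar fashion. Translating code into native instructions while the application is running is commonly known as just-in-time (JIT) compiling.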
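The difference between interpreting and dynamic recompilation (the same idea behind a JIT) shows up even in a toy example. This Python sketch assumes a made-up guest machine with five instructions (`SET`, `ADDR`, `DEC`, `JNZ`, `HALT`).

- The interpreter decodes every instruction each time it executes.
- The recompiler translates each basic block once into a Python function, caches it by guest address, and reuses it on later visits.

Real dynamic recompilers and JIT compilers emit native machine code for the host CPU rather than Python. Treat this as an illustration of the translate-and-cache structure, not of code generation.

```python
# Hypothetical toy guest program: computes b = 5 + 4 + 3 + 2 + 1.
PROGRAM = [
    ("SET", "a", 5),     # address 0
    ("SET", "b", 0),     # address 1
    ("ADDR", "b", "a"),  # address 2: b += a
    ("DEC", "a"),        # address 3: a -= 1
    ("JNZ", "a", 2),     # address 4: if a != 0, jump to address 2
    ("HALT",),           # address 5
]


def interpret(program: list) -> dict:
    """Interpreter: fetch and decode every instruction each time it runs."""
    regs = {"a": 0, "b": 0}
    pc = 0
    while True:
        op, *args = program[pc]
        if op == "SET":
            regs[args[0]] = args[1]
        elif op == "ADDR":
            regs[args[0]] += regs[args[1]]
        elif op == "DEC":
            regs[args[0]] -= 1
        elif op == "JNZ":
            if regs[args[0]] != 0:
                pc = args[1]
                continue
        elif op == "HALT":
            return regs
        else:
            raise ValueError(f"unknown opcode {op!r}")
        pc += 1


def translate_block(program: list, start: int):
    """Recompiler: translate the basic block at `start` into one host function
    that runs the whole block and returns the next guest address (None on HALT)."""
    lines = ["def block(regs):"]
    pc = start
    while True:
        op, *args = program[pc]
        if op == "SET":
            lines.append(f"    regs[{args[0]!r}] = {args[1]!r}")
        elif op == "ADDR":
            lines.append(f"    regs[{args[0]!r}] += regs[{args[1]!r}]")
        elif op == "DEC":
            lines.append(f"    regs[{args[0]!r}] -= 1")
        elif op == "JNZ":
            lines.append(f"    return {args[1]} if regs[{args[0]!r}] != 0 else {pc + 1}")
            break
        elif op == "HALT":
            lines.append("    return None")
            break
        else:
            raise ValueError(f"unknown opcode {op!r}")
        pc += 1
    namespace: dict = {}
    exec("\n".join(lines), namespace)  # stands in for emitting host machine code
    return namespace["block"]


def run_recompiled(program: list) -> tuple:
    """Translate each block once, then reuse the cached translation."""
    regs = {"a": 0, "b": 0}
    cache = {}  # guest address -> translated host function
    pc = 0
    while pc is not None:
        if pc not in cache:
            cache[pc] = translate_block(program, pc)
        pc = cache[pc](regs)
    return regs, len(cache)


print(interpret(PROGRAM))       # {'a': 0, 'b': 15}
print(run_recompiled(PROGRAM))  # ({'a': 0, 'b': 15}, 3)
```

Both paths compute the same result, but the recompiler decodes the loop body only once. Every later iteration reuses the cached translation, which is where dynamic recompilation gets its speed-up.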

The performance HLE can provide is such that it was possible to emulate the Nintendo 64 on a Pentium II processor in 1999, three years after the console’s release. This is also most likely how the Xbox One can emulate the Xbox 360, despite running on hardware that isn’t vastly superior to it.

Which type to use? That depends...

So, which is the best type to use?

Low-level emulation is one of the most accurate ways to simulate the system in question, since it replicates the behavior of the hardware. However, hardware-based emulation isn’t always feasible, as it adds cost to the system. Software-based emulation, meanwhile, requires intimate knowledge of the system or its parts, which may not be available if documentation is scarce. It also requires a system much more powerful than the original to run applications at the same speed. As a result, LLE is often limited to much older systems, early emulators that are still getting a handle on a system, or minor components of a system, such as an I/O controller.

High-level emulation, on the other hand, allows a system with complex hardware to be emulated on something only a bit more powerful. It may also let someone without intimate knowledge of the hardware emulate it. However, because an HLE only provides the functions of the hardware, it may not be able to reproduce features specific to that hardware, or hacks that developers used to push it beyond the norm. In recent years, though, developers have come to rely far less on hacks. Most current developers use industry-standard APIs. This not only makes the system easier to emulate but may even let the application run with better performance than on the original hardware. This is the approach most emulators of modern systems take.

What about virtual machines? WINE?

Virtual machines can be thought of like partitions on a storage drive. When you create two partitions on one storage drive, the OS presents what appear to be two separate physical drives. Likewise, a virtual machine uses some or all of the computer’s hardware resources to create an entire machine that acts as a single, separate computer.

While emulators are technically virtual machines, virtual machines are typically not used to emulate a completely different system. A virtual machine created on an x86-based system, for example, typically runs only x86 software. However, depending on where the virtual machine manager runs, it may need to emulate some hardware to keep the OS it’s running happy. For instance, Windows has traditionally not provided a way to share the video card with virtual machines. As a result, virtual machine managers running on Windows have had to emulate a video card.
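The following sketch shows a virtual machine manager emulating just a few devices. In a real VM, ordinary guest instructions run directly on the host CPU, and only accesses to emulated devices trap out to the manager. This Python sketch models only that trap-handling side. `EmulatedVideoCard`, `VirtualMachineMonitor`, the index/data register layout, and the port numbers are all hypothetical.

```python
from typing import Dict, List, Optional


class EmulatedVideoCard:
    """Hypothetical video card model exposing an index/data register pair."""

    INDEX_PORT = 0x10  # made-up port numbers for illustration
    DATA_PORT = 0x11

    def __init__(self) -> None:
        self.index = 0
        self.registers = [0] * 16

    def io_write(self, port: int, value: int) -> None:
        if port == self.INDEX_PORT:
            self.index = value % len(self.registers)  # select a register
        elif port == self.DATA_PORT:
            self.registers[self.index] = value        # write the selected register

    def io_read(self, port: int) -> int:
        if port == self.DATA_PORT:
            return self.registers[self.index]
        return self.index


class VirtualMachineMonitor:
    """Models only the trap side of a VM manager: ordinary guest instructions
    would run directly on the host CPU, and only I/O aimed at an emulated
    device 'exits' here to be handled in software."""

    def __init__(self) -> None:
        self.devices: Dict[int, EmulatedVideoCard] = {}

    def attach(self, device: EmulatedVideoCard, ports: List[int]) -> None:
        for port in ports:
            self.devices[port] = device

    def handle_io_exit(self, port: int, is_write: bool, value: int = 0) -> Optional[int]:
        device = self.devices.get(port)
        if device is None:
            return None if is_write else 0  # unclaimed ports read as 0 in this sketch
        if is_write:
            device.io_write(port, value)
            return None
        return device.io_read(port)


vmm = VirtualMachineMonitor()
card = EmulatedVideoCard()
vmm.attach(card, [EmulatedVideoCard.INDEX_PORT, EmulatedVideoCard.DATA_PORT])
vmm.handle_io_exit(0x10, is_write=True, value=3)   # guest selects register 3
vmm.handle_io_exit(0x11, is_write=True, value=42)  # guest writes 42 to it
print(vmm.handle_io_exit(0x11, is_write=False))    # 42
```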

WINE, an application that lets Linux- and UNIX-based systems run Windows programs, is also not an emulator. It’s even in the name: WINE Is Not an Emulator. Most Windows programs are compiled for x86 processors, and many people who use Linux or UNIX also run x86 processors, so WINE doesn’t need to emulate anything hardware-related. Instead, it’s what’s known as a compatibility layer. It provides a subsystem in the host OS that implements the Windows interfaces those programs expect, so they can run directly on the host.
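A compatibility layer in this sense translates calls rather than instructions. The Python sketch below is a heavily simplified illustration, not how WINE is implemented. It assumes two things:

- a hypothetical drive-letter mapping for translating Windows paths into host paths;
- a small dispatch table that services a Windows API call, by name, with a host-side implementation.

`DRIVE_MAP`, `to_host_path`, `get_tick_count`, and `call_windows_api` are made-up names. `GetTickCount` is a real Windows API name, but this stand-in only approximates its behavior using the host's monotonic clock.

```python
import ntpath
import posixpath
import time
from typing import Callable, Dict

# Hypothetical mapping of Windows drive letters to host directories.
DRIVE_MAP = {"C:": "/home/user/compat/drive_c"}


def to_host_path(win_path: str) -> str:
    """Translate a Windows path such as C:\\Games\\save.dat into a host path."""
    drive, rest = ntpath.splitdrive(win_path)
    root = DRIVE_MAP[drive.upper()]
    parts = [part for part in rest.split("\\") if part]
    return posixpath.join(root, *parts)


def get_tick_count() -> int:
    """Stand-in for a Windows call that returns elapsed milliseconds as a
    32-bit value, approximated here with the host's monotonic clock."""
    return int(time.monotonic() * 1000) & 0xFFFFFFFF


# The program's own x86 code would run natively; only its calls into the
# Windows API are looked up here and serviced by host-side implementations.
API_TABLE: Dict[str, Callable[..., object]] = {
    "GetTickCount": get_tick_count,
}


def call_windows_api(name: str, *args: object) -> object:
    return API_TABLE[name](*args)


print(to_host_path("C:\\Games\\save.dat"))  # /home/user/compat/drive_c/Games/save.dat
```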
