How are microchips made, anyway?

Creating a modern microprocessor is an incredibly complex undertaking.

Modern PCs are built out of a collection of seven primary components: the CPU, GPU, RAM, storage, motherboard, case, and power supply. Each of these (yes, even the case) now contains anywhere from a few microchips to potentially hundreds of chips that handle a wide variety of tasks: audio, communication, graphics, storage, and of course the work of the CPU, aka central processing unit. The primary building block of our modern computers is the silicon microchip, but what exactly is a microchip and how is it made? It's a fascinating and incredibly complex topic, and for this week's Tech Talk I'm going to give the ultra-condensed version.

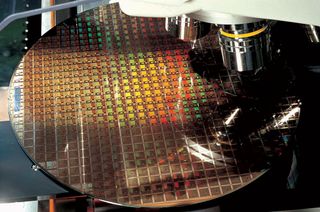

Microchips, microprocessors, CPUs, GPUs, or whatever you want to call them are fundamentally similar. They're all made from semiconductors, typically on 300mm silicon wafers, which in turn are cut from a large cylindrical silicon crystal. Those silicon cylinders are where everything starts, and they're grown from a seed crystal dipped into a vat of molten, nearly pure silicon. That 'nearly' bit is important, however, as small impurities in the wafers can lead to errors or non-functional parts.

With microscopic features, even a small particle can be a big deal. Think about the term nanometer for a moment. A human hair is anywhere from about 20 to 180 micrometers thick, or in other words 20,000 to 180,000 nanometers. The feature size on the latest AMD and Intel processors, meanwhile, is around 7nm to 70nm (depending on which specific feature we're talking about). Even a tiny speck of dust measures 2,000 to 5,000nm, which is why all processor manufacturing is done in clean rooms. But even then, a few particles may slip through the filters.
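To put those sizes side by side, here's a quick back-of-the-envelope sketch in Python. It only uses the figures quoted above; the variable and function names are my own, purely for illustration.

```python
# All sizes in nanometers, taken from the figures quoted in the text.
NM_PER_MICROMETER = 1_000

hair_nm = (20 * NM_PER_MICROMETER, 180 * NM_PER_MICROMETER)  # human hair
dust_nm = (2_000, 5_000)                                     # speck of dust
feature_nm = (7, 70)                                         # chip features


def ratio_range(big, small):
    """Return the (smallest, largest) ratio between two size ranges."""
    return big[0] / small[1], big[1] / small[0]


dust_vs_feature = ratio_range(dust_nm, feature_nm)
hair_vs_feature = ratio_range(hair_nm, feature_nm)

print(f"Dust vs. feature: {dust_vs_feature[0]:.0f}x to {dust_vs_feature[1]:.0f}x")
print(f"Hair vs. feature: {hair_vs_feature[0]:.0f}x to {hair_vs_feature[1]:.0f}x")
```

Even in the most forgiving case (the smallest dust speck against the largest feature), the particle is still dozens of times bigger than the thing it lands on.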

Dust and other contaminants aren't the only problem, however. The crystalline structure of the silicon wafer also varies slightly. It's supposed to be a perfect crystal lattice, but the growing process results in a few irregularities. Chips from the center of a wafer tend to be 'better' than those near the edge, meaning fewer irregularities that might affect things like the voltage required to hit a certain clockspeed.

Because of this variability and the potential for defects, modern microchips (especially CPUs and GPUs) have redundancies built into the design, so that one failed circuit doesn't ruin the whole chip. This makes it possible to disable portions of a chip, with anywhere from a handful to potentially hundreds of sections that can be turned on or off as needed. A functioning chip, even if it's slower or missing a few extra features, is better than no chip at all.

Consider AMD's new Ryzen CPUs as an example, where everything from the Ryzen 5 3600 up through the Ryzen 9 3950X is built using the same 8-core CCD (Core Complex Die) that measures 74mm^2. The 3600/3600X and 3900X all use CCDs with only six cores enabled, while the 3700X/3800X and 3950X use CCDs with all eight cores enabled (the 12-core 3900X and 16-core 3950X each use two CCDs). Why would AMD intentionally disable two CPU cores in each CCD on some parts? To improve chip yields and overall profitability.
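A rough way to see why disabling cores helps yields is to treat each core as an independent coin flip. The sketch below does that with a simple binomial calculation. The 97 percent per-core success rate is a made-up number for illustration, not an AMD figure, and the model ignores defects in the cache and other shared parts of the die.

```python
from math import comb


def prob_at_least(good_needed: int, total: int, p_core_ok: float) -> float:
    """Probability that at least `good_needed` of `total` cores work.

    Assumes every core fails independently with the same probability.
    """
    return sum(
        comb(total, k) * p_core_ok**k * (1 - p_core_ok) ** (total - k)
        for k in range(good_needed, total + 1)
    )


P_CORE_OK = 0.97  # hypothetical per-core success rate, for illustration only

full_die = prob_at_least(8, 8, P_CORE_OK)   # sellable only as an 8-core part
harvested = prob_at_least(6, 8, P_CORE_OK)  # sellable as a 6-core or 8-core part

print(f"All 8 cores good:     {full_die:.1%}")
print(f"At least 6 cores good: {harvested:.1%}")
```

Under these assumptions, a bit more than three quarters of the dies have all eight cores working, while nearly all of them have at least six. Every die in that gap is one that would otherwise be thrown away.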

Each silicon wafer contains potentially hundreds of chips, but not every chip from a wafer behaves the same. After the initial manufacturing process, each wafer gets cut into individual chips. These chips are then tested to determine how good each one is, a process called binning. Some may work perfectly, others may be effectively useless (too many bad spots), while many will fall somewhere in between those extremes.

The percentage of functioning chips is the yield. Most companies won't directly report their chip yield, though rumors and estimates suggest that Intel's first generation 10nm process (for the aborted Cannon Lake CPUs, the Core i3-8121U notwithstanding) had yields in the single digits. In other words, fewer than 10 percent of the potential 10nm processors were viable, and that's after Intel disabled the Gen10 graphics, and with a small dual-core die! In contrast, it's estimated that with the harvesting of partially functioning dies, yields for AMD's new Zen 2 parts may be 85 percent or higher.

This is important because each wafer that's produced has a relatively fixed cost, perhaps $7,000-$10,000 or more. This is also why smaller chips have traditionally been preferable to larger chips, since more chips can fit within the space of a wafer. A small 74mm^2 chip like the Zen 2 CCD has a potential of nearly 800 chips per wafer, while Intel's 10-core Skylake-X chips are around 322mm^2 and only fit about 170 chips per wafer. With a defect density of 0.1 per cm^2, the 10-core Skylake-X might lose up to a third of the potential chips (without harvesting), while the Zen 2 CCD would only have about 8 percent defective chips.
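If you want to play with these numbers yourself, here's a minimal sketch. It uses a common textbook approximation for dies per wafer and a simple Poisson model for defects, neither of which is necessarily what a fab uses internally. The approximation ignores scribe lines, edge exclusion, and the actual die shape, so its counts come out somewhat higher than the figures above, and the Poisson losses land a little under them. The $8,000 wafer cost is simply a value I picked from the range mentioned, and all function names are my own.

```python
from math import exp, pi, sqrt

WAFER_DIAMETER_MM = 300.0
WAFER_COST_USD = 8_000.0  # assumption: a value picked from the $7,000-$10,000 range


def gross_dies_per_wafer(die_area_mm2: float,
                         diameter_mm: float = WAFER_DIAMETER_MM) -> int:
    """Rough die count: wafer area over die area, minus an edge-loss term."""
    area_term = pi * diameter_mm**2 / (4 * die_area_mm2)
    edge_term = pi * diameter_mm / sqrt(2 * die_area_mm2)
    return int(area_term - edge_term)


def poisson_yield(die_area_mm2: float, defects_per_cm2: float) -> float:
    """Fraction of dies expected to have zero defects (simple Poisson model)."""
    die_area_cm2 = die_area_mm2 / 100.0
    return exp(-die_area_cm2 * defects_per_cm2)


def cost_per_good_die(die_area_mm2: float, defects_per_cm2: float,
                      wafer_cost: float = WAFER_COST_USD) -> float:
    """Wafer cost spread over the defect-free dies only (no harvesting)."""
    good_dies = gross_dies_per_wafer(die_area_mm2) * poisson_yield(
        die_area_mm2, defects_per_cm2)
    return wafer_cost / good_dies


for name, area in [("Zen 2 CCD", 74.0), ("10-core Skylake-X", 322.0)]:
    dies = gross_dies_per_wafer(area)
    bad = 1 - poisson_yield(area, 0.1)
    cost = cost_per_good_die(area, 0.1)
    print(f"{name}: ~{dies} dies/wafer, {bad:.0%} defective, ${cost:.2f} per good die")
```

The takeaway is the shape of the curve rather than the exact dollar amounts: a die that's a bit more than four times larger ends up costing several times more per working chip, because you get fewer candidates per wafer and lose a bigger share of them to defects.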

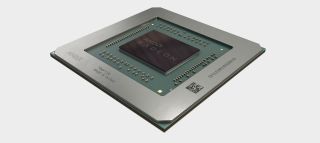

Binning addresses multiple aspects of the chip. First there's the harvesting of partially functioning dies—so an RTX 2060 that uses the same TU106 GPU as the 2070 only has 30 out of a potential 36 SMs enabled, as well as two memory controllers disabled. Binning also determines the ideal voltage and frequency, and sometimes a chip that is otherwise fully functional might get downgraded simply because it can't run at the desired speed. AMD's RX 5700 and RX 5700 XT use the same Navi 10 GPU, but besides having 256 fewer cores, the 5700 is clocked about 150-200MHz lower. Conversely, the RX 5700 XT 50th Anniversary Edition gets a higher binned chip that increases the base and boost clocks by 75MHz.
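Conceptually, binning is just a sorting rule applied to test results. The sketch below is a toy version of that idea: the unit counts (36 and 30) mirror the TU106 example above, but the tier names, clock thresholds, and the `assign_bin` function are all hypothetical and don't reflect any vendor's real test flow.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Bin:
    name: str
    min_units: int      # working shader clusters required
    min_clock_mhz: int  # stable clock required (hypothetical thresholds)


# Ordered from best to worst; a chip lands in the first bin it qualifies for.
BINS = [
    Bin("top tier", min_units=36, min_clock_mhz=1700),
    Bin("second tier", min_units=30, min_clock_mhz=1600),
]


def assign_bin(working_units: int, max_stable_mhz: int) -> Optional[str]:
    """Return the best bin a tested chip qualifies for, or None if it fails all."""
    for b in BINS:
        if working_units >= b.min_units and max_stable_mhz >= b.min_clock_mhz:
            return b.name
    return None


print(assign_bin(36, 1750))  # fully working and fast
print(assign_bin(36, 1650))  # fully working, but too slow for the top bin
print(assign_bin(31, 1800))  # fast, but a few units are defective
print(assign_bin(28, 1800))  # too many defective units for any bin
```

Note the second case: a chip with every unit working still drops a tier because it can't hold the required clock, which is exactly the kind of downgrade described above.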

In short, the best chips get sold as the fastest, most expensive parts. Meanwhile, the functional but perhaps not quite as good chips are sold as lower tier parts. Depending on how intensive and accurate the binning process is, the gap between the 'best' and 'worst' functional chips from a wafer may be relatively small. An established process node like TSMC's 12/16nm or Intel's 14nm++ generally gets very good yields and relatively similar performing parts. Newer manufacturing nodes like TSMC's 7nm or Intel's upcoming 10nm (round two) meanwhile generally have a larger gap between 'good' and 'mediocre' parts.

I spoke about this a bit in the Radeon RX 5700 review, but typically all of the above means you won't hit the same clockspeeds on second or third tier products, no matter how hard you try to overclock. It's something I've seen in CPUs as well. My Intel Core i9-9900K sample hits 5.1GHz on all cores (with sufficient cooling), or 5.0GHz with a modest liquid cooling solution. The i7-9700K and i5-9600K samples meanwhile top out at 4.9GHz. Sure, it's only 100MHz, but that's a $150 difference for what is otherwise the same chip. AMD's Ryzen processors show similar behavior, with the 3900X able to hit slightly higher clockspeeds than the 3700X and 3600X.

There's a catch, however. Sometimes you can get lucky and win the silicon lottery with a great chip sold as a second-tier part. After binning and harvesting of chips, sometimes a company will simply have too many 'good' chips and not enough 'lesser' chips. Since the difference in performance between the first and second tier parts may only be 5-10 percent, but the price difference might be 20 percent or more, customers generally buy more of the less expensive chips. If there aren't enough 'bad' chips available, some of the higher quality parts get downgraded and sold as a less expensive part.
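Here's a small sketch of how that supply and demand mismatch plays out. The chip counts and the `allocate` function are invented for illustration; real allocation decisions obviously involve far more than this.

```python
def allocate(top_chips: int, lower_chips: int,
             top_demand: int, lower_demand: int) -> dict:
    """Fill top-tier orders first, then cover any lower-tier shortfall
    by downgrading leftover top-bin chips."""
    sold_top = min(top_chips, top_demand)
    spare_top = top_chips - sold_top
    shortfall = max(0, lower_demand - lower_chips)
    downgraded = min(spare_top, shortfall)
    sold_lower = min(lower_chips, lower_demand) + downgraded
    return {
        "sold_top": sold_top,
        "sold_lower": sold_lower,
        "downgraded": downgraded,
        # Chance that a lower-tier buyer actually gets a top-bin chip.
        "lottery_odds": downgraded / sold_lower if sold_lower else 0.0,
    }


# Hypothetical batch: plenty of good chips, but buyers mostly want the cheaper part.
result = allocate(top_chips=600, lower_chips=200, top_demand=300, lower_demand=400)
print(result)
```

With these made-up numbers, half of the cheaper parts sold are secretly top-bin silicon. Flip the inputs so that 'lesser' chips are plentiful and the odds drop to zero, which is why the lottery is better on a mature process with high yields.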

In the past, it was sometimes possible to reverse the downgrading: AMD once sold 2-core and 3-core CPUs that you could potentially turn into a working 4-core part if you got lucky. That's generally not possible any longer, as the extra cores on CPUs and GPUs are disabled in such a way that they can't be turned back on, but you can still get lucky with a part that overclocks better. There are even companies that will pre-test CPUs and sell the 'special' chips at a higher price, effectively eliminating the lottery aspect for a fee.

If all you want is a PC that works, you probably don't need to know any of the above details. You get what you paid for, and the fact that your CPU might have two cores disabled doesn't really matter. For the PC enthusiast, on the other hand, it can help determine which parts to buy in order to build the ultimate rig, and why some parts overclock better than others. It also helps explain why larger CPUs and GPUs are so freaking expensive. Yes, Nvidia makes a lot more revenue from an RTX 2080 Ti than an RTX 2060, but the 2080 Ti easily costs more than twice as much to create.

Jarred's love of computers dates back to the dark ages when his dad brought home a DOS 2.3 PC and he left his C-64 behind. He eventually built his first custom PC in 1990 with a 286 12MHz, only to discover it was already woefully outdated when Wing Commander was released a few months later. He holds a BS in Computer Science from Brigham Young University and has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.
