Hitman 2 performance analysis: what you need for 60fps

We've tested numerous graphics cards, CPUs, and notebooks to find out what it takes to get the most out of Hitman 2.

Hitman 2 is essentially more of its predecessor, which is no bad thing. However, IO Interactive has certainly made it look a bit more impressive, with denser crowds and some extra bells and whistles. But how well does it run compared to 2016's Hitman?

A major talking point here is that DX12 was initially dropped for the new game, which is odd considering it was in the previous version. It's even more confusing considering Hitman was one of the best DX12 implementations. DX12 support was later restored, after many months, but these tests were all run prior to that patch. It speaks to the amount of extra work maintaining two separate APIs requires, but we'll likely see more games supporting both DX11 and DX12 for quite a while.

In terms of hardware, most mainstream GPUs (GTX 1050 Ti / RX 570 and above) can run Hitman 2 at 1080p and 60fps or more, and while you'll also benefit from a faster CPU in some areas (especially larger crowds), just about any CPU from the past five years should suffice.

As for features, Hitman 2 does okay, with some minor omissions. There are 10 graphics settings to tweak, but no global presets. You can cap the frame rate at 30 or 60 FPS (with Vsync), or disable Vsync and run fully unlocked. There's no option to change the field of view, though it does auto-adjust based on your resolution. Similarly, you can play the game in 21:9 aspect ratio but you'll get black bars on cutscenes. You can rebind keys to your heart's content, but if you're using a controller you'll have to use the default settings. And finally, mod support is not present in any capacity, so outside of hacks and ReShade mods we don't expect to see much in the way of user-generated content.

Let's dig into the settings a bit more.

Hitman 2 settings overview

As our partner for these detailed performance analyses, MSI provided the hardware we needed to test Hitman 2 on a bunch of different AMD and Nvidia GPUs, multiple CPUs, and several laptops—see below for the full details, along with our Performance Analysis 101 article. Thanks, MSI!

Hitman 2 offers 10 graphics settings, without really giving you an idea of how your performance will be affected if you change them around. We've tested them all using both a GTX 1060 6GB and an RX 580 8GB, with the game running at 1080p.

At Ultra, the RX 580 has a slight edge over the GTX 1060, and that trend continues as you work your way through the various settings and compare them to the maximum. We've set each of the individual options to the minimum value as noted below, to see how performance is (or isn't) affected.
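To make the percentages for each setting easier to apply to your own system, here's a minimal Python sketch of the arithmetic, with every gain measured relative to the all-maximum baseline. The function names are purely illustrative, and the 60fps baseline is a made-up placeholder rather than one of our measurements.

```python
def percent_gain(baseline_fps: float, tweaked_fps: float) -> float:
    """Percent change in average fps relative to the all-maximum baseline."""
    if baseline_fps <= 0:
        raise ValueError("baseline_fps must be positive")
    return (tweaked_fps / baseline_fps - 1.0) * 100.0


def fps_after_gain(baseline_fps: float, gain_percent: float) -> float:
    """Estimated average fps after applying a quoted percent gain."""
    return baseline_fps * (1.0 + gain_percent / 100.0)


# Placeholder baseline (an assumption, not a measured result).
baseline = 60.0
# A 12 percent gain on that baseline works out to roughly 67 fps.
estimate = fps_after_gain(baseline, 12.0)
print(f"{estimate:.1f} fps")
```

Keep in mind that gains from separate settings won't necessarily stack, since each one was measured on its own against the maximum-quality baseline.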

Level of Detail Low: You'll see about a seven percent increase in performance by turning the level of detail down, but of course it will decrease the quality of the visuals in the environment.

Texture Quality Low: Another pretty self explanatory one, turning this down means you'll see lower resolution textures, giving you around a three percent boost. Generally not necessary unless you have a card with 2GB or less VRAM.

Texture Filter Trilinear: Texture filtering is how a 2D image is displayed on a 3D model. Bilinear is the simplest, and mipmapping uses lower resolution textures for distant objects. Trilinear filtering smooths the transition between mipmaps, keeping the quality up when you're looking at far away things, although it will still be a little blurry. Anisotropic filtering is a higher quality mode and looks a bit better. Opting for trilinear gives about a three percent boost in performance.

SSAO Off: Screen space ambient occlusion is an approximation of ambient occlusion used in real-time rendering. Essentially it tries to determine which parts of a scene shouldn't be exposed to as much light as others. Sometimes it's great, sometimes SSAO gives things an ugly looking 'anti-glow.' Turning it off here gives you a 10 percent performance boost.

Shadow Quality Low: This is the biggest factor in performance when talking about individual settings. It affects the quality of soft shadows and the distance for detailed shadows, but you'll see a pretty handy 12 percent increase in performance.

Screenspace Shadows Off: This one won't give you as much of a boost as simply turning the quality down. You'll get about a five percent increase.

Reflection Quality Off: Simply put, this option makes reflections in mirrors and water look better. Turning it off barely changed performance in our testing, though our test sequence may not have had 'enough' reflective surfaces to show a difference.

Motion Blur Off: Turning motion blur off hardly affects performance, so if you like the effect feel free to leave it enabled.

Dynamic Sharpening Off: This counters effects caused by temporal anti-aliasing, and in testing we saw a slight dip on the RX 580 but a slight increase on the GTX 1060 (but basically margin of error).

Simulation Quality Best: Same story here, with fairly negligible dips and boosts in performance. This setting controls the amount and fidelity of crowds, cloth, destruction, and the particle system, depending on your CPU, and it also affects audio. Slower or older CPUs may benefit more from turning this to 'base,' but we saw little difference in testing.
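For the curious, the texture filtering terms from the Texture Filter entry above can be sketched in a few lines of Python. This is a simplified illustration, not how the game or any GPU driver implements it: it assumes a square, power-of-two grayscale texture stored as a list of rows, clamps at the edges, and takes the level of detail (`lod`) as an input, whereas real hardware derives it from how much the texture is being shrunk on screen. All function names are our own.

```python
import math


def lerp(a, b, t):
    """Linear interpolation between a and b."""
    return a + (b - a) * t


def bilinear(tex, u, v):
    """Blend the four nearest texels at normalized coords u, v in [0, 1]."""
    h, w = len(tex), len(tex[0])
    x, y = u * (w - 1), v * (h - 1)
    x0, y0 = int(math.floor(x)), int(math.floor(y))
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)  # clamp at the edges
    fx, fy = x - x0, y - y0
    top = lerp(tex[y0][x0], tex[y0][x1], fx)
    bottom = lerp(tex[y1][x0], tex[y1][x1], fx)
    return lerp(top, bottom, fy)


def build_mipmaps(tex):
    """Build lower resolution copies by averaging 2x2 blocks of texels."""
    levels = [tex]
    while len(levels[-1]) > 1:
        prev = levels[-1]
        n = len(prev) // 2
        levels.append([
            [(prev[2 * y][2 * x] + prev[2 * y][2 * x + 1]
              + prev[2 * y + 1][2 * x] + prev[2 * y + 1][2 * x + 1]) / 4.0
             for x in range(n)]
            for y in range(n)
        ])
    return levels


def trilinear(mips, u, v, lod):
    """Blend bilinear samples from the two mip levels around a fractional lod."""
    lod = max(0.0, min(lod, len(mips) - 1))
    lo = int(math.floor(lod))
    hi = min(lo + 1, len(mips) - 1)
    return lerp(bilinear(mips[lo], u, v), bilinear(mips[hi], u, v), lod - lo)


# A 4x4 checkerboard: sharp up close, averaging toward gray in the distance.
checker = [[float((x + y) % 2) for x in range(4)] for y in range(4)]
mips = build_mipmaps(checker)
print(trilinear(mips, 0.0, 0.0, 0.5))
```

Without the final blend in `trilinear`, the switch from one mip level to the next shows up as a visible line in the distance, which is the transition that trilinear filtering smooths over.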

MSI provided nearly all of the hardware for this testing, including all of the graphics cards. The main test system uses MSI's Z370 Gaming Pro Carbon AC with a Core i7-8700K as the primary processor, and 16GB of DDR4-3200 CL14 memory from G.Skill. For the Core i9-9900K, testing was performed on the MSI Z390 MEG Godlike motherboard, using the same memory. I also tested performance with Ryzen processors on MSI's X370 Gaming Pro Carbon. The game is run from a Samsung 860 Evo SSD for all desktop GPUs.

MSI also provided three of its gaming notebooks for testing, the GS63VR with GTX 1060 6GB, GE63VR with GTX 1070, and GT73VR with GTX 1080. The GS63VR has a 4Kp60 display, while the GE63VR and GT73VR both have 1080p120 G-Sync displays. For the laptops, I installed the game to the secondary HDD storage.

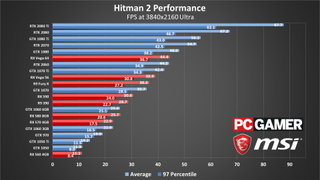

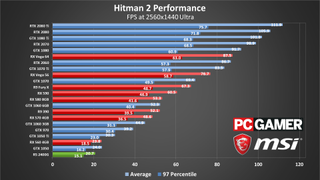

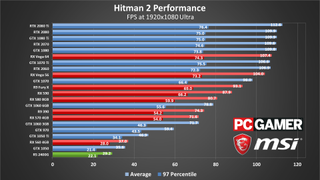

Hitman 2 graphics card benchmarks

Most of the graphics card testing is as you would expect, with the beefy Nvidia cards, which dominate our best graphics cards list, coming out on top at all resolutions and graphics settings. The super expensive RTX 2080 Ti really shows how good it is at 4K, with maximum ('ultra') quality still easily breaking 60fps. Everything else struggles while it still chugs along quite nicely. It beats out the RTX 2080 by around 24 percent, and then it's another steep drop down to the 1080 Ti and beyond.

The best AMD can manage at these settings is the RX Vega 64, which falls between the GTX 1070 Ti and GTX 1080, but the RTX 2080 Ti gives you a performance increase of a whopping 50 percent. For about triple the price.

Unless you're breaking the bank on a graphics card, you'll probably want to stay away from this resolution and graphics setting.

Ultra at 2560x1440 makes for slightly better reading, although the cards closer to the budget end of the scale won't handle this very well either. The 2080 Ti comes out on top, of course, but it's only a small step down to the RTX 2080. We're starting to hit CPU limits as well, even with an overclocked i7-8700K.

The RX Vega 64 sits between the GTX 1070 Ti and 1080 again, while the 580/570 cards slightly outperform their 1060 counterparts. If you're looking for this resolution and graphical quality above 60fps, you'll want at least an RX 590 or above (and perhaps tweak your settings slightly).

Down to 1920x1080 then, and the gaps start to close up, particularly at the top. Everything from the GTX 1080 up to the RTX 2080 performs basically the same, with a couple of percent increase in performance for the 2080 Ti. The RX Vega 64 isn't far behind either. Basically, we're hitting CPU limitations.

Just about all of the cards run above 60 FPS just fine. You'll have to go back a generation or two or find a budget card not to hit that mark. Even the GTX 1060 3GB handles it fine.

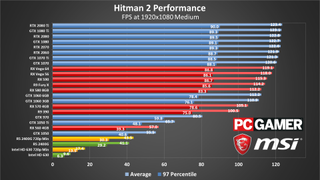

Drop the graphics settings to 'medium' (we use the 'medium' or 'moderate' setting where available, with SSAO and screenspace shadows off) and most cards will run Hitman 2 like a dream. However, budget GPUs like the GTX 1050 / 1050 Ti and RX 560 still struggle to maintain 60fps.

Elsewhere, our test sequence clearly hits the CPU harder, as from the GTX 1060 3GB right up to the RTX 2080 Ti, you'll only find about a 10 percent performance difference.
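A small spread between very different graphics cards is the usual sign of a CPU bottleneck. Here's a rough Python sketch of that rule of thumb. The 15 percent threshold and the fps figures are arbitrary placeholders chosen for illustration, not values from our charts, and the function names are our own.

```python
from typing import Mapping


def gpu_spread_percent(avg_fps_by_gpu: Mapping[str, float]) -> float:
    """Percent gap between the fastest and slowest GPU in a set of results."""
    if not avg_fps_by_gpu:
        raise ValueError("need at least one result")
    slowest = min(avg_fps_by_gpu.values())
    fastest = max(avg_fps_by_gpu.values())
    if slowest <= 0:
        raise ValueError("fps values must be positive")
    return (fastest / slowest - 1.0) * 100.0


def looks_cpu_limited(avg_fps_by_gpu: Mapping[str, float],
                      threshold_percent: float = 15.0) -> bool:
    """Heuristic: a small spread across GPUs suggests the CPU is the limit."""
    return gpu_spread_percent(avg_fps_by_gpu) <= threshold_percent


# Placeholder numbers only (assumed for illustration, not measured).
placeholder_results = {"slow_gpu": 100.0, "mid_gpu": 104.0, "fast_gpu": 110.0}
print(looks_cpu_limited(placeholder_results))  # True: the spread is about 10 percent
```

It's only a heuristic, since an engine frame rate cap or a memory bottleneck can flatten results in much the same way.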

For integrated graphics solutions, the Ryzen 5 2400G with Vega 11 Graphics does reasonably well, averaging 40fps. Oddly, dropping the resolution to 720p and minimum quality doesn't really help it run any faster, so it's bumping into other bottlenecks. Intel's HD Graphics 630 meanwhile comes up well short of being playable, mustering just 17fps even at minimum quality and 720p.

Hitman 2 CPU Performance

For CPU testing we're using the MSI RTX 2080 Duke. A powerful graphics card like this will best highlight the performance potential for each of the CPUs we tested.

At 3840x2160 ultra, all the CPUs perform about the same, with only a three or four percent drop in performance when you compare the Ryzen 5 2600X to the Core i9-9900K. Everything else is even closer together. It's when you start dropping the resolution and the graphics quality that you start to see the big differences come into play.

At 2560x1440 Ultra, the i9-9900K still performs the best, with the Core i7-8700K OC not far behind. The other CPUs then start to see a drop off in performance. The Core i3-8100 is 22 percent slower than its big, big brother. The AMD CPUs fare better than the i3, but there's a big jump up to the Core i5-8400 and the rest of the Intel CPUs at the top of the list.

At 1080p, the Core i7-8700K OC actually jumps above the i9, at both Medium and Ultra. The rest of the list stays in the same order. The AMD CPUs range between around 21 and 25 percent worse performance compared to the i7 at both graphics settings.

Hitman 2 notebook performance

Over to laptops, it's about as you'd expect, with desktop GPUs outpacing the mobile versions even though the mobile parts sound beefier on paper. At both medium and ultra, since we're limited to 1080p displays on two of the three laptops, the desktop GTX 1060 outpaces even the GT73VR 1080, and by a pretty huge margin at medium quality.

The desktop 1060 is 25 percent faster at medium, while at ultra the mobile GPUs catch up a bit and the desktop GTX 1060 is about seven percent faster. Meanwhile at the bottom of the scale, the GS63VR 1060 starts to struggle a bit and generally benefits from turning down a few settings.

Closing thoughts on Hitman 2 performance and hardware requirements

Desktop PC / motherboards

MSI Z390 MEG Godlike

MSI Z370 Gaming Pro Carbon AC

MSI X299 Gaming Pro Carbon AC

MSI Z270 Gaming Pro Carbon

MSI X470 Gaming M7 AC

MSI X370 Gaming Pro Carbon

MSI B350 Tomahawk

MSI Aegis Ti3 VR7RE SLI-014US

The GPUs

MSI RTX 2080 Ti Duke 11G OC

MSI RTX 2080 Duke 8G OC

MSI RTX 2070 Gaming Z 8G

MSI GTX 1080 Ti Gaming X 11G

MSI GTX 1080 Gaming X 8G

MSI GTX 1070 Ti Gaming 8G

MSI GTX 1070 Gaming X 8G

MSI GTX 1060 Gaming X 6G

MSI GTX 1060 Gaming X 3G

MSI GTX 1050 Ti Gaming X 4G

MSI GTX 1050 Gaming X 2G

MSI RX Vega 64 8G

MSI RX Vega 56 8G

MSI RX 580 Gaming X 8G

MSI RX 570 Gaming X 4G

MSI RX 560 4G Aero ITX

Gaming Notebooks

MSI GT73VR Titan Pro (GTX 1080)

MSI GE63VR Raider (GTX 1070)

MSI GS63VR Stealth Pro (GTX 1060)

IO Interactive gives the following system requirements, but unfortunately makes no mention of target framerates, resolution, or settings. Based on the specs, I'd assume 1080p 30fps for minimum, and 1080p ultra 60fps for the recommended PC.

MINIMUM:

OS: OS 64-bit Windows 7

Processor: Intel CPU Core i5-2500K 3.3GHz / AMD CPU Phenom II X4 940

Memory: 8GB RAM

Graphics: NVIDIA GeForce GTX 660 / Radeon HD 7870

DirectX: Version 11

Storage: 60GB available space

RECOMMENDED:

OS: OS 64-bit Windows 10

Processor: Intel CPU Core i7-4790 4GHz

Memory: 16GB RAM

Graphics: Nvidia GPU GeForce GTX 1070 / AMD GPU Radeon RX Vega 56 8GB

DirectX: Version 11

Storage: 60GB available space

In terms of 'realistic' system requirements, we recommend a GTX 970 or above for 1080p 'medium' quality at 60fps, while 1440p 'ultra' gamers will want a GTX 1070 or above for 60fps. 4K is mostly the domain of extreme hardware like the RTX 2080 and 2080 Ti, which are the only GPUs to break 60fps averages in our testing. Don't neglect the CPU either, though a Core i5-8400 or above will get pretty close to maxing out any current graphics card.

Overall, Hitman 2 is an excellent game, and it garnered our Best Stealth Game of 2018 award. Not surprisingly, performance ends up being very similar to the previous outing in most respects, though the removal of DirectX 12 support still feels weird.

Hitman (2016) saw numerous updates over time that improved performance, including support for multi-GPU under both DX11 and DX12 modes. It's not clear if the engine and environment changed so much that maintaining both APIs was deemed 'too difficult,' or if DX12 was dropped simply because it didn't provide enough of a benefit. Or maybe the switch in publisher from Square Enix to Warner Bros. Interactive is to blame. Whatever the case, it's still an odd change of heart.

I also miss the built-in benchmark mode of the previous release, not because it was absolutely necessary but because it makes it easier for others to compare performance with our results. Left to my own devices, the benchmark sequence consists of running through a large crowd in the Finish Line mission. All of the people likely put more of a strain on the CPU than GPU, which may explain some of the results, and other 'lighter' areas in terms of crowd control may run at higher framerates.
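If you want to compare your own manual run against ours, the basic math on a frametime log looks something like the Python sketch below. It assumes you've captured per-frame times in milliseconds with a tool of your choice. The function names, the 97th percentile default, and the sample numbers are all assumptions for illustration rather than a description of our exact tooling.

```python
import math


def average_fps(frame_times_ms):
    """Average fps over a run: total frames divided by total seconds."""
    if not frame_times_ms:
        raise ValueError("need at least one frame time")
    total_seconds = sum(frame_times_ms) / 1000.0
    return len(frame_times_ms) / total_seconds


def percentile_low_fps(frame_times_ms, percentile=97.0):
    """fps at the given frametime percentile (nearest-rank method)."""
    if not frame_times_ms:
        raise ValueError("need at least one frame time")
    ordered = sorted(frame_times_ms)
    rank = max(1, math.ceil(percentile / 100.0 * len(ordered)))
    return 1000.0 / ordered[rank - 1]


# Made-up sample: nine quick frames and one slow one.
sample_ms = [10.0] * 9 + [20.0]
print(f"average: {average_fps(sample_ms):.1f} fps")
print(f"percentile low: {percentile_low_fps(sample_ms):.1f} fps")
```

Dividing total frames by total time, rather than averaging per-frame fps values, keeps a handful of very fast frames from inflating the result. For a 60fps target, each frame needs to finish in about 16.7ms.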

Once again, thanks to MSI for providing the hardware for this testing. I used the latest Nvidia and AMD drivers at the time of publication, Nvidia 417.35 and AMD 18.12.3. Testing was completed in December 2018, so a few months of patches and driver updates have helped to equalize performance. AMD and Nvidia GPUs generally perform about the same, for the various performance levels, though that means AMD still has no alternative to anything above the GTX 1080 / Vega 64. Hopefully that will arrive in 2019.

Jarred's love of computers dates back to the dark ages when his dad brought home a DOS 2.3 PC and he left his C-64 behind. He eventually built his first custom PC in 1990 with a 286 12MHz, only to discover it was already woefully outdated when Wing Commander was released a few months later. He holds a BS in Computer Science from Brigham Young University and has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.
