Doom benchmarks: is your graphics card ready?

Many demons were harmed in the making of this article.

Your GPU is doomed… maybe

The good news for gamers everywhere is that Doom is great fun. Last week, when Doom launched, we took a quick break to check out the new Nightmare graphics setting. The summary, if you missed it, is that there's not a massive difference between Nightmare and Ultra or even High quality. Medium starts to change things up a bit, and Low turns off a lot of shadows, but overall image quality remains quite high, even at the Low preset. The corollary is that performance doesn't scale much when you lower the quality settings.

CPU: Intel Core i7-5930K @ 4.2GHz

CPU: Intel Core i3-4360 @ 3.7GHz (simulated)

Mobo: Gigabyte GA-X99-UD4

RAM: G.Skill Ripjaws 16GB DDR4-2666

Storage: Samsung 850 EVO 2TB

PSU: EVGA SuperNOVA 1300 G2

CPU cooler: Cooler Master Nepton 280L

Case: Cooler Master CM Storm Trooper

OS: Windows 10 Pro 64-bit

Drivers: AMD Crimson 16.5.2.1 Hotfix, Nvidia 368.16

That leaves the question of what sort of hardware you'll need, or more generally, how well a variety of GPUs run the game. Now it's time to answer that question with our full performance breakdown.

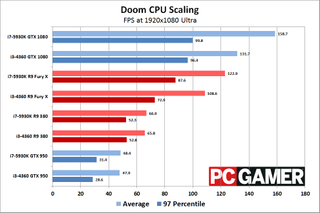

We picked fifteen (now sixteen) cards, including the new GTX 1080, to test with Doom. Our test bed hardware is the same as usual: an overclocked Core i7-5930K running at 4.2GHz. We also simulated a Core i3-4360 by disabling all but two of our CPU's cores and running it at 3.7GHz, though this isn't fully accurate due to cache and platform differences. For CPU scaling, we used the fastest and slowest modern AMD and Nvidia hardware. Armed to the teeth with our graphics arsenal, then, let's find out how well Doom runs.

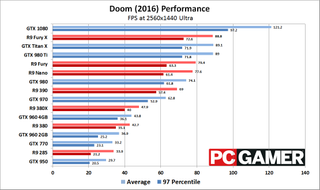

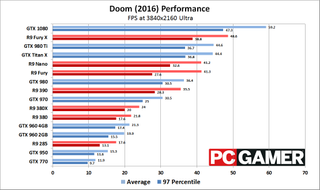

We tested at four settings for this article: 1920x1080 Medium with FXAA is our "light" testing, while 1920x1080, 2560x1440, and 3840x2160 were all run at Ultra settings with SMAA. For CPU scaling, we confined testing to just 1920x1080 at Medium and Ultra quality. Starting with the GPU comparisons, here's how Doom runs:

At our "light" settings, all of the cards manage to break 60 fps at 1080p. Dropping one step further to 1080p Low only gains a few more percent, so this is about as light as Doom gets, short of lowering the resolution. The GTX 770 is still rather potent, and even a GTX 750 Ti or similar hardware should be playable, provided you're only gunning for 30+ fps and not 60+. The fastest GPUs are clearly running into CPU bottlenecks here, though it's not clear whether AMD's cards hit that bottleneck earlier or are just a bit slower.

Update: I found an old HD 7750 1GB GDDR5 card buried away in my closet. This is literally the slowest GPU I have, short of integrated graphics. The verdict? Not good. 1080p Medium crashed in Doom, but before that happened it was pulling around 15-20 fps at best. 1080p Low managed to run, averaging 19.4 fps with 8.3 fps minimums. Taking it one step further, 1366x768 at minimum quality (everything "off" or "low") almost managed to be playable at 36 fps, but it had a weird stutter every second or two, with minimums of 11.3 fps. I don't know whether the 1GB of VRAM is to blame, but it's not an enjoyable experience right now.

The HD 7750 is interesting for another reason, however: it has similar specs to the GPU in the fastest current APU, the A10-7890K (and the A10-7870K). Each has 512 GCN cores running at around 900MHz (866MHz on the APU), though the 7750 uses the older GCN 1.0 architecture, so it may be a bit slower. Considering the 7750 has dedicated GDDR5 memory, while the APU has to share system DDR3 memory with its CPU cores, I wouldn't expect the APU to match it, let alone exceed it, meaning Doom for now looks to be purely the domain of dedicated GPUs.
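A back-of-the-envelope comparison helps show why. The sketch below computes peak FP32 throughput (shaders × 2 FLOPs per clock for a fused multiply-add × clock speed) and peak memory bandwidth (bus width in bytes × effective transfer rate). The shader counts and clocks come from the paragraph above; the memory configurations are assumptions for illustration only: a 128-bit GDDR5 bus at 4.5 GT/s for the HD 7750, and dual-channel (128-bit) DDR3-2133 for the APU. The function names are hypothetical.

```python
def peak_gflops(shaders: int, clock_mhz: float) -> float:
    """Peak FP32 GFLOPS, counting one fused multiply-add (2 FLOPs) per shader per clock."""
    return shaders * 2 * clock_mhz / 1000.0


def peak_bandwidth_gbs(bus_width_bits: int, transfer_rate_mts: float) -> float:
    """Peak memory bandwidth in GB/s: bytes moved per transfer times millions of transfers per second."""
    return (bus_width_bits / 8) * transfer_rate_mts / 1000.0


# Shader counts and clocks as given in the article.
hd7750_gflops = peak_gflops(512, 900)  # 921.6 GFLOPS
apu_gflops = peak_gflops(512, 866)     # 886.784 GFLOPS

# Assumed memory setups (not stated in the article).
hd7750_bw = peak_bandwidth_gbs(128, 4500)  # 128-bit GDDR5 at 4.5 GT/s: 72.0 GB/s, dedicated
apu_bw = peak_bandwidth_gbs(128, 2133)     # dual-channel DDR3-2133: ~34.1 GB/s, shared with the CPU

print(f"HD 7750: {hd7750_gflops:.0f} GFLOPS, {hd7750_bw:.1f} GB/s")
print(f"A10 APU: {apu_gflops:.0f} GFLOPS, {apu_bw:.1f} GB/s")
```

Under these assumptions the two GPUs land within about 4 percent of each other on raw compute, but the APU gets less than half the memory bandwidth, and its CPU cores compete for that bandwidth too.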

Bump up to 1080p Ultra and the performance hit varies considerably. The fastest GPUs only drop around 10-15 percent, while the slower GPUs (those with only 1.5GB or 2GB of VRAM) take a more substantial 30-35 percent hit. The GTX 1080, meanwhile, drops just 1 percent, again showing the CPU bottleneck; the Vulkan API may help alleviate that once the patch is released.
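One simple way to picture the GTX 1080's tiny drop is a "slower stage sets the pace" model: if the CPU and GPU work in parallel, each frame takes roughly as long as whichever side is slower, so once the CPU is the limit, extra GPU work barely moves the frame rate. This is a deliberately simplified illustration, not a description of how Doom or the drivers actually schedule work, and the millisecond costs below are hypothetical.

```python
def fps(cpu_ms: float, gpu_ms: float) -> float:
    """Frame rate when CPU and GPU work overlap and the slower of the two sets the pace."""
    return 1000.0 / max(cpu_ms, gpu_ms)


# Hypothetical per-frame costs, for illustration only (not measured values).
cpu_ms = 6.0
fast_gpu = {"medium": 4.0, "ultra": 5.0}    # GPU work stays below the CPU time
slow_gpu = {"medium": 10.0, "ultra": 15.0}  # GPU work always exceeds the CPU time

for name, gpu in (("fast GPU", fast_gpu), ("slow GPU", slow_gpu)):
    before = fps(cpu_ms, gpu["medium"])
    after = fps(cpu_ms, gpu["ultra"])
    drop = 100.0 * (before - after) / before
    print(f"{name}: {before:.0f} -> {after:.0f} fps ({drop:.0f}% drop)")
```

In this toy model the fast GPU loses nothing when its workload grows, because it was already waiting on the CPU, while the slow GPU's frame rate falls in step with its extra work.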

At 1440p Ultra, nearly all of the graphics cards are fully loaded, though quite a few still get well over 60 fps, including the GTX 1080 at 120 fps, which would make an excellent match for a 144Hz QHD display. Most of the GPUs take a 35 percent hit to frame rates moving from 1080p to 1440p, slightly more on the 2GB VRAM cards. Fiji cards (the Fury X, Fury, and Nano) don't drop quite as much thanks to the higher bandwidth of their HBM memory, and the GTX 1080 is still bumping into CPU limits at times.

Finally, at 4K Ultra settings (not Nightmare, mind you), the number of pixels is 2.25 times that of 1440p (about 8.3 million versus 3.7 million), and frame rates on all of the cards take a 45-50 percent tumble. Even the world's fastest GPU, the GTX 1080, can't quite stay above 60 fps consistently, and for now there's no SLI to help out, though that's apparently coming.
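The resolution steps are easy to check with a little arithmetic. If frame rate were limited purely by pixel throughput, it would fall in inverse proportion to the pixel count, so the sketch below prints the pixel ratio for each step along with that idealized drop. It's a simplification (the helper names are hypothetical): real games also have per-frame costs that don't scale with resolution, which helps explain why the drops measured here (around 35 percent and 45-50 percent) are smaller than these upper bounds.

```python
RESOLUTIONS = {
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "4K": (3840, 2160),
}


def pixels(name: str) -> int:
    """Total pixels rendered per frame at the named resolution."""
    width, height = RESOLUTIONS[name]
    return width * height


def ideal_drop_percent(src: str, dst: str) -> float:
    """Frame-rate drop if fps scaled exactly with 1 / pixel count (a purely pixel-bound bound)."""
    return 100.0 * (1.0 - pixels(src) / pixels(dst))


for src, dst in (("1080p", "1440p"), ("1440p", "4K")):
    ratio = pixels(dst) / pixels(src)
    print(f"{src} -> {dst}: {ratio:.2f}x pixels, ideal drop {ideal_drop_percent(src, dst):.1f}%")
```

Working through it: 1080p to 1440p is about 1.78x the pixels (an idealized 43.75 percent drop), and 1440p to 4K is 2.25x (about a 55.6 percent drop).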

Crunchy pyres of CPU

All of the above was done with a screaming fast overclocked 6-core processor, of course. What happens if your CPU isn't quite so hot? We decided to dispense with the middle ground and simply drop from 6-core Haswell-E to a simulated dual-core Haswell chip. Basically, any Core i5 processor going back to the i5-2500K should exceed the performance of the i3-4360, so we're aiming pretty low down the totem pole. Turns out, it doesn't really matter that much:

At 1080p Medium, AMD's Fury X takes a moderate 14 percent hit to performance and the GTX 1080 loses 7 percent. Again, as we noted earlier, it looks like AMD's drivers have more CPU overhead than Nvidia's. The lower-end GPUs, however, are basically all within the margin of error. Considering most gamers have at least a quad-core Core i5 in their rigs, the CPU shouldn't be a major issue, and Vulkan may remove it from the performance equation even further. 1080p Ultra seems to put a bit more strain on the CPU, at least for the GTX 1080, which shows a 17 percent drop, while the Fury X shows a 12 percent drop; the 380 and 950 remain within the margin of error (less than two percent).
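Each CPU-scaling comparison boils down to a percent change between the Core i7 and Core i3 results, checked against a noise threshold. A minimal sketch, assuming the two percent margin of error mentioned above; the function names are hypothetical, and the example normalizes each Core i7 result to 100 fps instead of using actual measured frame rates (the third card is a made-up low-end example).

```python
MARGIN_OF_ERROR = 2.0  # percent; differences smaller than this are treated as run-to-run noise


def percent_drop(baseline_fps: float, test_fps: float) -> float:
    """Percentage lost going from the baseline (Core i7) result to the test (Core i3) result."""
    return 100.0 * (baseline_fps - test_fps) / baseline_fps


def describe(card: str, baseline_fps: float, test_fps: float) -> str:
    """Summarize a CPU-scaling result, flagging changes that fall inside the margin of error."""
    drop = percent_drop(baseline_fps, test_fps)
    if abs(drop) < MARGIN_OF_ERROR:
        return f"{card}: within margin of error ({drop:.1f}%)"
    return f"{card}: {drop:.1f}% slower with the Core i3"


# Core i7 results normalized to 100 fps, so the Core i3 value reads directly as a percentage.
print(describe("Fury X, 1080p Medium", 100.0, 86.0))
print(describe("GTX 1080, 1080p Medium", 100.0, 93.0))
print(describe("Hypothetical low-end card", 100.0, 98.5))
```

Using the absolute value means a result that comes out slightly faster on the Core i3, which can happen from run-to-run variance, is also reported as noise rather than as a gain.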

That isn't to say the CPU makes no difference in performance. One thing I immediately noticed with the Core i3 testing is that game and level load times are much longer. With the Core i7-5930K, Doom takes around 20 seconds to launch (I've got the game set to skip the intro videos with the "+com_skipIntroVideo 1" startup parameter), while the Core i3-4360 needs almost twice as long, 38 seconds, and this is loading from a fast NVMe SSD. A quad-core CPU should make up for most of that difference, but it's something to consider.

And of course, there's the dirty secret of benchmarking: all of this was tested in one area of the game. I've heard later areas in Doom are more taxing, though I've been so busy running the same test over and over that I haven't experienced that yet. Multiplayer could also prove more demanding, if it's anything like previous titles, so you'll probably want to give yourself some headroom if you want to maintain silky smooth frame rates.

In the meantime, we're still waiting to see the Vulkan API version of Doom, which was promised "soon after launch." If you're using a 60Hz display with a decent GPU, Vulkan might not matter much short of 4K resolutions, but anyone with a 144Hz display will almost certainly appreciate higher frame rates. We'll be checking back as soon as the Vulkan patch is released to the public, and if we're lucky, we might even get multi-GPU support at the same time.
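The refresh-rate point comes down to frame-time budgets: to deliver a new frame on every refresh, the game has to finish each frame within 1000 divided by the refresh rate, in milliseconds. The short sketch below (the function name is hypothetical) prints that budget for a 60Hz and a 144Hz display.

```python
def frame_budget_ms(refresh_hz: float) -> float:
    """Milliseconds available per frame to keep up with every refresh of the display."""
    return 1000.0 / refresh_hz


for hz in (60, 144):
    print(f"{hz}Hz: {frame_budget_ms(hz):.2f} ms per frame")
```

A 60Hz display allows about 16.7 ms per frame, so a GPU already averaging well above 60 fps has time to spare; at 144Hz the budget shrinks to under 7 ms, where shaving CPU and driver overhead is far more likely to show up on screen.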

Jarred's love of computers dates back to the dark ages when his dad brought home a DOS 2.3 PC and he left his C-64 behind. He eventually built his first custom PC in 1990 with a 286 12MHz, only to discover it was already woefully outdated when Wing Commander was released a few months later. He holds a BS in Computer Science from Brigham Young University and has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.
