Gamers have a lot to thank Dr. Mark Dean for, and the world's first 1GHz chip is only the start

We talk with the man who helped build the foundations of modern gaming hardware.

Where do you begin when talking with inventor, IBM Fellow, engineer, and professor Dr. Mark Dean? His work on the IBM PC, ISA system bus, and Color Graphics Adapter in the 1980s is surpassed only by the project he led in the late 1990s: building the first chip to break the 1GHz clock speed barrier.

Dr. Mark Dean earned a BSEE degree from the University of Tennessee in 1979, where he would return as John Fischer Distinguished Professor later in life. He also has an MSEE degree from Florida Atlantic University (1982), and a PhD in Electrical Engineering from Stanford University (1992). In between all that he was breaking convention and working on cutting-edge technologies at IBM.

Dean went on to become CTO of IBM Middle East and Africa, before shifting gears to achieve another lifelong goal of becoming a faculty member at a university, researching neuromorphic computing and advanced computer architectures at The University of Tennessee Knoxville, Tickle College of Engineering's Min H. Kao Department of Electrical Engineering and Computer Science.

Now can you see why it might be difficult to pick a place to start?

Dean's accomplishments are manifold. He holds over 30 patents spanning decades and many of modern computing's pivotal breakthroughs, including those for the IBM PC, the ISA system bus, and even AI neural networks. In 1996, he became the first black IBM Fellow, was later awarded the Black Engineer of the Year President's Award, and was inducted into the National Inventors Hall of Fame.

If it wasn't clear enough already, gamers owe a debt of gratitude to Dean's body of work at the cutting edge of computing. And not just on PC, either: Nintendo, Sony, and Microsoft all used IBM processors at one point or another, made possible by breakthroughs in computing from Dean and his teams.

It's a career any young engineer would undoubtedly aspire to and gaze upon in awe. So when I excitedly sit to talk with Mark Dean, my first thought goes to who has been his inspiration over the years.

"I would have to say my father was my primary inspiration." Dean tells me. "He was always building things. He just had a knack for knowing how things work. He could fix something and not even have the manual that describes the device or engine or whatever he was working on. He built a tractor from scratch when I was just a young boy … My first car was an old '47 Chevrolet and we restored it. And he worked at the Tennessee Valley Authority in their dams, and so he knew a lot about electricity. So I got into electrical components, built an amplifier, you know, started being interested in computers.

"I was wanting to build computers. So I got lucky I was able to do that."

If you'd prefer to listen to the interview, you can do just that through Soundcloud.

Even at a young age Dean had his sights set on the goliath of the tech industry, IBM. While perhaps a spectre of what it once was in consumer-facing electronics today, in the early '70s it was the computing leader and its logo could be found on typewriters, such as the Selectric line; in retail stores; on newly minted floppy disk drives, a new standard of its own invention; and across vast mainframe computers. It would be a while before it would earn the nickname 'Big Blue' (it would gain that during Dean's tenure in the '80s), but it was well on its way to worldwide technological dominance.

"Early Middle School in eighth grade or ninth grade, I decided that I wanted to work for IBM, because at the time IBM was the computer company of choice. They kind of dominated the industry." Dean recalls. "... it was early in my life that I said 'this is what I want to do'. It was just amazing I happened to have the opportunity to interview with IBM when I graduated, and they hired me."

It wasn't long before Dean, as an engineer still in his 20s, was working on some of IBM's most pivotal and successful projects in Boca Raton, Florida, where IBM had its impressively large manufacturing and development facility.

In celebration of Black History Month, we've been speaking to leading black voices in the semiconductor industry. Want to read more? Here's some sage advice from Intel's own Steven Callender, Intel Labs wireless engineer, and Marcus Kennedy, GM of gaming.

One product that Dean had a hand in creating would even go as far as influencing the name of this very website: the IBM PC was released in 1981, powered by Microsoft's PC-DOS, and popularised the term 'PC'. It was a product that spawned a wave of like-for-like machines from a bunch of fresh-faced computing companies, but it wasn't so clear to those most involved in bringing it to market that the project would take off.

"We had no clue," Dean recalls. "We were just building systems, we thought we could use and some other people could use. When we built the first IBM PC, we thought we might sell 200,000 in its lifetime. And then we'd go off and build the next thing. So the market at the time, we were selling mostly large mainframes and some some servers, Series/1, and this is back in the day, way back in the day. And so volumes weren't very high. If you built 10,000 machines a year, that was a lot of machines.

"Workstations were the most prevalent, but that was still in the tens of thousands. So building a system imagining you're going to sell millions, there was there was nothing that was selling in the millions, maybe telephones. Even typewriters weren't selling millions. So no, we didn't we didn't have a clue."

From an expectation of 200,000 in its lifetime, IBM would find itself selling "a system-a-minute" every business day.

And the key to its success, Dean believes, was the fact the IBM PC told everyone else how to build it on the back of the tin.

"IBM enabled the industry because we essentially told people how to build exact copy of the IBM PC. That was the linchpin. That was what made IBM PCs unique from the Apple systems at the time. People could build a duplicate, sell a duplicate, and make money selling the duplicate. And that opened up the industry.

"We didn't do it so people per se could copy your machines. We did it just like, and you weren't born at the time," Dean aptly remarks, "in old televisions, the logic backgrounds were always in the back of the television so the repairman could repair the machines. So we thought, okay, our technical reference manual needs to have similar kinds of detail. And it had enough detail to where you could actually build a copy. And that became IBM PC compatible."

An antiquated practice by modern standards. It's difficult to imagine a logic diagram for a modern day PC scrawled on the back of the case, a sentiment Dean agrees with.

"People are more cautious, obviously, about that. But at the time, that's why the industry took off. And that was probably the best decision we ever made."

Yet Dean's role hardly saw him stationary for a moment, even as IBM racked up whopping new revenue as a result of the IBM PC. If you thought of an idea, IBM had the tools to build it, he tells me. It was a playground for young engineers with plenty of drive, and Dean fit the bill perfectly.

"When they hired me I thought, gosh, you know, I didn't tell them this, but if they just gave me a place to sleep and fed me I would've just worked for free because they throw you in a lab with all the equipment, all the parts, anything you would need, to build anything you can imagine," Dean says. "And so it was for me, and I probably was on the fringe, it was pretty much a playground."

Teams of engineers for every project were small and that helped generate a trust and respect that would keep ideas going from light bulb moment to working prototype at rapid pace. The team that worked on the IBM PC was roughly a baker's dozen of some of IBM's finest. The larger teams that Dean was a part of were closer to 25 people at most, and they worked closely together for more than just shared engineering aspirations.

"We had a policy that every time you got promoted, you had to have a beer party," Dean fondly recalls. "We had this small place called Buds. It was a just a dive in South Florida, that we would have a party at and they would let us order pitchers of beer and we could order out pizza, have them come by. And that's what we would do every time and when anybody got a promotion, you had to fund one of these parties. That was what kept us tight together.

"Yeah, those were good times. And those parties I think made the difference, they kind of made us stay tight, trust each other. It made for the environment that made everything we did possible."

From the IBM PC, Dean would move on to the ISA bus, then known as the AT bus. The AT bus wouldn't be called the ISA bus until years later, when rival Compaq renamed it to avoid infringing IBM's trademarks.

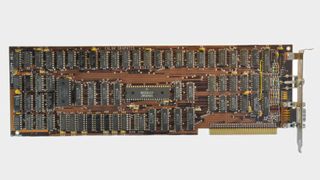

The original PC bus was a project led by Mark Dean for the original IBM PC, but it wasn't until 1984 that the ISA bus patent would be filed under his name and that of co-inventor Dennis L. Moeller, as a part of the IBM PC/AT system launched that same year. This AT system bus would act as a precursor to PCI and, in turn, the near-universal PCIe bus common in PCs today. At one point ISA and PCI slots would even share motherboards as the industry gradually shifted from the pervasive ISA bus to the newer PCI standard.

At the time, though, the ISA bus was just what was needed to kickstart a whole new world of PC peripherals and add-in cards. You could plug in video cards, network cards, more ports, and storage mediums. Even memory and—don't say old school PCs don't have anything on modern PCs—extra processors could be jacked into an ISA slot.

"That's why we designed it with so much capacity, as we were hoping for many more pluggable devices. In fact, at the time, and I think this is why it lasted, you could also plug memory into the ISA bus. And it would be fast enough to give you reasonable expansion and performance.

"So we had designed it kind of beyond just an I/O bus, it actually had master/slave capability too," Dean explains, using the master/slave terminology that had been the standard in computing for decades but has since given way to better terms. "So you could plug other processors in to the system and create a parallel computer. And so we actually built a few where we had four processor cards, three of them plugged into the ISA bus and it would share that interface and do different things. It led to intelligence, I think, in the cards, so the first intelligent video adapters, graphics adapters, came from the ability to be a master on the ISA bus."

Graphics adapters were one such speciality of Dean's, in fact. IBM had required more advanced graphics for the original PC, but at the time it had nothing capable of delivering colour. Two chips were considered for the role: one from Intel and one from Motorola. After prototyping and testing, it was decided that the Motorola 6845 display controller was the better fit, and thus began the process of moulding it into something more suitable for the IBM PC.

The end result was the Color Graphics Adapter, which marked Dean's first intricate design at the time. It was capable of generating 16 colours in total, although not all at the same time, and could connect to both monitor and TV.

A graphics card and the system bus to plug it into: Dean's work had already laid the foundations of modern computer graphics. Over these few years IBM PC gaming enjoyed a number of releases, such as DONKEY.BAS, a driving game co-created by Bill Gates and Neil Konzen; Microsoft Adventure; and Microsoft Flight Simulator. Arcades, meanwhile, had whirred to life with Space Invaders towards the end of the '70s and were now offering Pac-Man and Donkey Kong in what's widely considered to be arcade gaming's golden age.

All of which is to say Dean's accomplishments were already proving their worth in the computing market. And those are just the few projects that IBM ended up making and which were huge successes commercially.

"There were projects that you don't hear about that we ended up building. We actually, I claim, were one of the first to build a programmable remote control because the PC Jr. had a cableless keyboard, it was a remote keyboard. And so we knew how to do RF programming, but pointing on an remote to a programmable remote, which we designed and made it work. And so we built one, demoed it, but IBM wasn't in the remote control business.

"We built lots of things," Dean says. "We built an interface to drive a typewriter from the PC. That was something we never marketed but other people did."

Dean went on to work at IBM for another 10 years before finally conceding that he must get a PhD if he were ever to become an IBM Fellow or a faculty member at a university. So, decision made, he told IBM, the company agreed to pay for his education, and he left to study at Stanford with advisors Mark Horowitz and David Dill.

On his return, PhD in hand, Dean would take on a stint working on IBM's PowerPC instruction set architecture (ISA; not to be confused with the bus) before heading up the IBM Austin Research Laboratory.

"And that's where the first gigahertz microprocessor was done," as Dean says.

The Gigahertz breakthrough

Dean recalls that the initial spark had come from some early testing, which had shown that there was potential for a high clock speed chip.

"I think it came from some initial testing that was done with the silicon process that we were working on," he explains. "And the devices seemed to be able to be clocked at that frequency. And the chip sizes were such that we thought we could, at least if we kept the chips small enough, we could run a clock across a chip and actually be able to make it work. We also had just developed enough test tools, so that we could actually measure signals at a gigahertz."

Yet it had not been done before. From process to architecture to monitoring equipment, all had to be created bespoke for this experiment to be a success.

"It's funny, the silicon industry always thought: 'oh, we'll never build it any faster than that' at the time. I guess we were at 500-600 megahertz," Dean explains. "But I had a small team of engineers who had been playing around with higher speed circuitry… and they said, 'I think we can we can do this'. And I said, 'okay, we're research, so let's try it.' "

Even Dean admits he had his reservations, though none that would deter him or his team.

"You're always apprehensive, but you can't be afraid. I've learned that if you don't try you'll never get there. You know, my grandfather would always say, well, I think he got this saying from somebody else, but you shoot for the stars to get to the mountaintop. And so we thought, 'okay, we'll shoot for gigahertz', we might get to something a little bit less, but we should at least shoot for gigahertz."

Try and succeed. Dean's team of talented engineers crafted an architecture and CMOS chip that could claim a gigahertz frequency in action. It required a great deal of work to put together, too, not only in fundamental architectural changes but in monitoring and testing capability.

Clocking mechanisms had to be redesigned, and circuitry needed to be distributed on the chip differently to avoid noise and crosstalk, all vastly different to how chips were designed and produced at the time.

"The devices were different. The clocking mechanisms were different. You had to bring in new approaches to how you distributed the circuitry on the chip. You had to worry about noise, cross talk. There was a lot of things you had to worry about. Grounding was key. So we had to do different types of layering of the different layers of silicon on the chip so so that you wouldn't get too much noise that would cut across the signals.

"Fortunately, IBM had some pretty advanced silicon technology." Dean tells me, "and it enabled that but the process architecture also had to be heavily considered. Because at the time it was difficult to mix memory devices and logic devices on the same chip. But we kind of had to, we figured that out."

The first gigahertz chip was a momentous milestone in PC history, and one which helped pave the way for the multi-gigahertz processors available from Intel, AMD, and IBM to this day with its POWER processors, ranging from 2–5GHz speeds. IBM's designs, as Dean notes, were at the forefront of console gaming processors for multiple generations and across most notable gaming brands.

"This team was just top notch researchers, and they hit a gigahertz. And we were really proud of that. And we thought, 'Okay, well, this may lead to something else.' Little did we know that was gonna lead to IBM building game processors for Nintendo, Xbox, and PlayStation."

The GameCube, Xbox 360, Wii, PlayStation 3, and Wii U were all powered by IBM processors or co-designed, IBM-made semi-custom chips.

Yet Dean was nowhere near convinced the job was done, which is something few engineers will willingly admit. In fact, he was resolute about finding an alternative to what he considered a slew of problems that would bite modern processors soon enough: heat, error-prone programming, and slowing silicon advancements.

"I thought we will have to go a different way," Dean says. "Because we always thought that, at some point, we were not going to be able to continue to increase the clock rate. And we actually hit that point, I claim in around 2004. I mean if you look back around 2004 we were at somewhere around three gigahertz, I believe, so three to four. At that time, we stopped being able to just increase clock rate, because right now the systems you buy, they're right around three to four gigahertz. I mean, they can cool them and double clock and blah, blah, blah. But those are all Band-Aids, to be honest. And so the silicon drove most of the advancements in the computer industry.

"Now we talk about, if you look at system performance, now most of this is driven by memory capacity. And the more memory you have, you can get more performance. But processor performance what happens is they put larger caches on, that's still more memory, they put more cores on, but programmers have a tough time writing threaded programmes to leverage all those cores. We have hit kind of the ceiling, basically, on 'raw processor performance.'

"When we moved from bipolar to CMOS," he continues, "this was years ago, but when the mainframes butt up against the max on using bipolar transistors to build computers because they couldn't cool them anymore, they had to switch to CMOS. Well we're at the exact same point, most systems that are built have some form of cooling, significant cooling, even watercooling now just like bipolar had, and we can't get past that. The last supercomputer at Oakridge was a full water-cooled system. And yep, I've seen this happen before, something's going to have to change for us to get past this heat problem that we've got."

There's hardly a better example of that than our gaming PCs, in fact. More cores, higher TDPs, and more extreme cooling have become increasingly prominent over the past decade in PC gaming, something Dean refers to as a "brute force kind of approach."

I ask Dean if there are any examples of modern hardware that he finds impressive, or that have surprised him, but none come to mind.

"I'm always disappointed in how slow technology moves," he tells me.

One area where Dean is impressed, however, is game design.

"The one thing that I'm most impressed with the last 10 years is the quality of the characters that people develop for games. My wife and I always talk about how we will get to the point where you can't tell the difference between a animated character, I mean, I shouldn't use that term for that sense, so antiquated, but that's what I'll call them, that this character you create versus a real person, we're close.

"And so I am impressed that there's a lot of progress being made in that area. We'll have to see if it continues, it should. It's just too bad that you're somewhat constrained by needing more and more compute power to make it and more complex programming models to make that work."

For Dean, traditional computing is like a hammer.

"It's just a tool, maybe you get bigger hammer, but it still just does the same thing. Applying it to solve problems in other industries I think is where a lot of the breakthroughs are occurring."

Dean remains an optimist for the future of computing, though, in spite of his want to always take the best thing going and go one step further, or ten.

"Maybe there's something better to take us beyond where we are today," Dean says.

And his hypothesis? That something may lie in artificial intelligence.

"I'm biased towards instead of programming and machines, training it, you know, having it learn how to solve a problem from all past history and knowledge that we have, you know, allow it to learn how to do something versus a static programme. And that way it can be dynamic, it can learn other things and continue to adjust as anomalies pop up, or other things pop up. So from a security standpoint, it might be a much stronger machine, from a flexibility standpoint might be a much better machine."

At UT, Dean's research had been into neuromorphic computing: processors and architectures that mimic the structure of the brain.

"We actually have designed some silicon devices that simulate or mimic neurons and synapses in the brain," Dean explains. "They're not as complex as neurons and synapses in the brain, but we claim you don't need to be that complex. That the brain has its function, but if you're going to do computing, you just need some of the basic functions of a neuron and synapse to actually make it work."

Besides the future of robotics and computers that can smell, there's clearly a future for AI in our humble hobby, from game development to driving new player experiences.

"In relative to gaming, I always thought it'd be neat if you could design a character that would essentially learn from its experience, and has a more free mind," Dean says, pondering on the concept as he elaborates. "You know gaming characters, they tend to, if you're operating in the same method, they'll always respond the same way. It'd be neat if you can make it a little bit more variable, where they would alter their response based on what they've experienced in past parts of the game.

"And outcomes, I guess that might be a problem, because then you would never know what the outcome would be. I don't know if you could sell it. If you wouldn't ever know the true outcome. But you could imagine that the landscapes and the risks the machine would always be variable."

A concept that I'm sure we've all pondered on at some point or another while playing an expansive RPG, yet one that Dean sees as something of an inevitability for gaming, even if we don't yet know how to get there, or what that would look like.

"Who knows what you would end up coming out with? I think that's where we're gonna get to in gaming. And I don't know if that's gonna make it more fun or not."

Dean admits that's just one potential answer to a question that many are exploring ways to solve. From replacements for silicon, such as carbon nanotubes, to cutting-edge research on theories yet to prove successful, such as quantum computing (which IBM is an avid proponent of), all that separates us from the answer is more research and people willing to "think outside the box," Dean tells me.

Innovative ideas and the right approach are the key to breakthroughs, then. Something Dean is very familiar with. I ask him what is the standout eureka moment in his career, yet he's keen to say that he has experienced just as many "negative eurekas", those times when you realised something just wasn't going to work, over the years as positive ones. And that's all a part of the process.

Yet it was the IBM Model 7821 that stands out as one of his best. The team wanted to put a second-level cache on the device, and a big one at that, but no one had done it before.

"Everybody said, 'No, you can't do it can't do it'. And I remember sitting at home watching a football game was playing I had eureka moment, I know exactly how to do this. And we actually went down that path built it, demoed it. And I remember Intel saying, 'Well, you can't do that', 'How did you do that?' Because we were using their chipset. They said 'No, we've never been able to build that. How did you build that?' And I said, 'Well, you know, we'll tell you later.'

"So it was that that made that particular system unique in the industry, because nobody else had, we were twice as big as everybody else's caches. And nobody quite could figure out how we actually did it."

Showing up Intel at their own game? I can see why this one has stuck at the forefront of Dean's memory.

With someone as successful as Dean, but also as dynamic, you want to find out the process of how he achieved all that he did, just as much as those things he ended up creating.

"I think the reason I've been a little bit successful is I'm a pretty good generalist. Now, I may not be an expert in much of anything, but I like putting things together, I like being in that middle where you connect the needs of the user with what you can do with technology, and you bring in some new interesting research ideas and build something. And I've never been constrained by thinking it's not gonna work, I always go in and I can make it work.

"Don't be sad that you didn't quite get to where you want to be. Because if you believe in your team, and in the talent, you'll get as far as anybody else is going to get. So if you believe that then you're going to be competitive."

Some modesty on Dean's behalf there, but exceptional advice nonetheless, not just for cutting-edge electrical engineering, either.

When it comes to engineering advice, Dean has plenty of that, too. First, look to booming areas of the industry. Cybersecurity, for one, is a hot topic that Dean is sure will only see rapid expansion in the years to come, but AI also comes back around as the epicentre of engineering research.

"AI continues to grow. I think that's inherent, because we're still collecting more and more data and people are sitting on essentially gold mines. They just don't know how to get to the insights in their data. But if they could figure that out, I mean, they'd create another Amazon or Google.

But if there's one piece of advice that Dean thinks you could get started on immediately, it's this: learn to code.

"You can differentiate yourself if you know a little bit of programming, a little bit of Python, a little bit of database management, a little bit of content management. Yes, certain areas can help you differentiate yourself. And it's amazing how much value that brings to bear because because most people they don't have a clue and if you walk in and say 'Yeah I can write a little script that will do XYZ,' they'll say, well, they'll think that's magic."

Dean and I speak a little longer, running over our allotted time, which is something I feel guilty about but doesn't seem to bother Dean one bit. He appears to be only interested in hearing my thoughts on the future of gaming, virtual reality and augmented reality, and the overdue death of the mouse and keyboard.

"Why are we still tethered to these things I have to hold in my hand?" Dean exclaims.

What's clear from our conversation, though, is that Dean has been propelled through his career by a deep passion for electrical engineering, both as a profession and a hobby; a seemingly unshaken resolve; and what can only be described as a genuine interest in pondering what lies beyond convention.

And so after what was intended to be an article diving into Dean's work with the world's first gigahertz processor, I find myself typing out a profile spanning highlights across his lengthy career, and barely scratching the surface. But there's one thread that stands out from my conversation with Dean: there's a lot that gamers have to thank Mark Dean for, that much is abundantly clear, and plenty that the next generation of engineers, technologists, and all comers can learn from his time at the cutting edge of computing.

Jacob earned his first byline writing for his own tech blog. From there, he graduated to professionally breaking things as hardware writer at PCGamesN, and would go on to run the team as hardware editor. He joined PC Gamer's top staff as senior hardware editor before becoming managing editor of the hardware team, and you'll now find him reporting on the latest developments in the technology and gaming industries and testing the newest PC components.
