AMD’s Polaris Shoots for the Stars

14/16nm FinFET for GPUs

We already talked about the demonstration of working 14nm FinFET silicon back in December. Of course, working in a controlled demo environment isn’t the same as being fully functional in every scenario you might throw at it; presumably AMD will tweak and refine the drivers and hardware over the coming months as we approach the retail launch. But what is 14nm FinFET, and why does it matter?

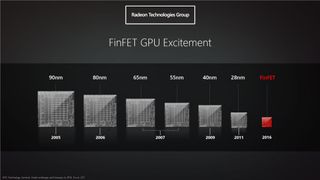

This slide from AMD shows the past decade of graphics process nodes. We went from yearly half-node updates in 2005-2007 to full-node updates every two years from 2007-2011…and then we had to wait. A half-node 20nm update was supposed to arrive in 2013 and was later pushed to 2014, but as the slide shows, it never happened.

At a high level, one of the big problems with GPUs during the past few years has been the reliance on 28nm manufacturing technology. AMD and Nvidia have used the same core process for five years, a virtual eternity in the rapidly evolving world of computers! Several interrelated issues were at play. First, as noted above, TSMC’s 20nm production was delayed; the original plan was to launch the new node about two years after 28nm came online. Second, once 20nm was ready for production, the GPU manufacturers (AMD and Nvidia) found that they just weren’t getting the scaling they expected, which ultimately led both companies to stick with 28nm until the next-generation process node was ready.

The reason for the wait is that the next node would move to FinFET transistors, which help tremendously with one of the biggest limiting factors in GPUs (and processors in general): leakage. Traditional planar transistors stopped scaling well beyond 28nm, so even though everything got smaller, leakage actually got worse; the result was that a 20nm GPU might not have performed much better than a 28nm GPU. And since power is one of the main limits on GPU performance (no one really wants to make a GPU that requires more than 250-275W), we hit a wall.
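A standard textbook simplification (not an AMD figure) helps show why leakage matters so much under a fixed power budget. Total chip power is often split into dynamic switching power and static leakage power, where α is the activity factor, C the switched capacitance, V the supply voltage, f the clock frequency, and I_leak the leakage current:

$$P_{\text{total}} = \underbrace{\alpha C V^{2} f}_{\text{dynamic}} + \underbrace{V I_{\text{leak}}}_{\text{static (leakage)}}$$

If a card is capped at roughly 250W, every watt lost to the leakage term is a watt that can’t go toward higher clocks or more active shaders, which is why a transistor design that cuts I_leak matters so much.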

We’ve seen evidence of this wall from both AMD and Nvidia during the past year. Compare the GTX Titan X (3072 CUDA cores at 1000MHz) with the GTX 980 Ti (2816 cores at 1000MHz), and you’ll find that the 980 Ti is typically within one or two percent of the Titan X, even though the latter has nine percent more processing cores. Whether the GPUs are limited by memory bandwidth or by the 250W power limit, the result is roughly the same performance from both, despite the difference in resources. The same thing happened with the R9 Fury X and R9 Fury: the Fury X has 14 percent more shaders (4096 vs. 3584 cores), but it is typically only six percent faster.
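To put those numbers side by side, here is a small back-of-the-envelope sketch. The core counts come from the paragraph above; the performance gaps are the approximate “typical” figures quoted there (2 percent is used as the upper end of “one or two percent”). The “scaling efficiency” metric and the function names are illustrative assumptions, and the calculation ignores clock speed and memory differences.

```python
# Rough check of how much extra hardware turns into extra performance.
# Core counts are from the article; performance gains are the article's
# approximate "typical" figures, not new benchmark data.


def percent_more(larger: float, smaller: float) -> float:
    """Return how many percent larger `larger` is than `smaller`."""
    return (larger / smaller - 1.0) * 100.0


def scaling_efficiency(resource_gain_pct: float, perf_gain_pct: float) -> float:
    """Hypothetical metric: share of the extra hardware that shows up as speed (1.0 = perfect)."""
    return perf_gain_pct / resource_gain_pct


PAIRS = {
    # name: (bigger chip cores, smaller chip cores, approximate performance gain in %)
    "GTX Titan X vs GTX 980 Ti": (3072, 2816, 2.0),
    "R9 Fury X vs R9 Fury": (4096, 3584, 6.0),
}

if __name__ == "__main__":
    for name, (big, small, perf) in PAIRS.items():
        cores = percent_more(big, small)
        eff = scaling_efficiency(cores, perf)
        print(f"{name}: {cores:.1f}% more cores, ~{perf:.0f}% faster, efficiency {eff:.2f}")
```

Running it prints about 9.1 percent more cores with an efficiency of 0.22 for the Nvidia pair, and 14.3 percent more cores with an efficiency of 0.42 for the AMD pair: in both cases well under half of the extra shader hardware shows up as extra speed.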

So far, we’ve been talking about 14nm FinFET, which refers to Samsung’s process technology as licensed by GlobalFoundries (GF); GF manufactured the working Polaris chip we were shown. TSMC is still in the picture, however, though it calls its current process 16nm FinFET. There are almost certainly differences between the Samsung and TSMC solutions, but at one point one of AMD’s senior fellows said something to the effect of, “Don’t get too caught up on the 14nm vs. 16nm aspect—they’re just numbers and there may not be as many differences as the numbers would lead you to expect.”

What we know right now is that the low-end Polaris parts will be manufactured by GF (and maybe Samsung?), but the Radeon Technologies Group (RTG) was also quick to note that it will still manufacture parts with TSMC “when it makes sense.” We don’t expect to see two variants of a single GPU (14nm and 16nm), but it does sound as though AMD will have some of its next-generation GPUs produced by TSMC. That would mean a lot of extra work validating designs for two different processes, unless 14nm and 16nm are far more similar than we think. Anyway, we don’t have any details on other AMD Polaris GPUs yet, and we don’t even have many details on Polaris itself, but it will be interesting to see how AMD rolls out the new GPUs during the coming year.

Jarred's love of computers dates back to the dark ages when his dad brought home a DOS 2.3 PC and he left his C-64 behind. He eventually built his first custom PC in 1990 with a 286 12MHz, only to discover it was already woefully outdated when Wing Commander was released a few months later. He holds a BS in Computer Science from Brigham Young University and has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.