AMD RTG Summit: HDR, Displays, FreeSync, and More

Welcome to the RTG Summit

Earlier this month, AMD hosted its first-ever RTG (Radeon Technologies Group) Summit, replacing the former AMD Technology Summit. (Side note: it's interesting that the branding was all about Radeon; as far as I can recall, the AMD logo was nowhere to be seen.) The focus was squarely on graphics technologies, and the message was clear: AMD is not resting on its laurels when it comes to graphics. Unfortunately, we can’t yet discuss everything we were shown, so this is the first of three parts covering the new and exciting technologies we should see over the coming year. For this first installment, we’re digging into the Visual Technologies, focusing mostly on the displays you’re actually going to look at rather than the hardware generating the pixels.

The RTG Summit was a nice break from the norm in that most of the presentations came from senior engineers rather than marketing. Even better, we were able to meet with these engineers over the course of two days to get a deeper understanding of what the technologies mean, and in some cases to provide feedback on what we feel are the most important emerging trends and the things we’d like to see. So if there’s anything you’d like us to pass back to AMD, let us know in the comments.

Your Current Display Is So 1930

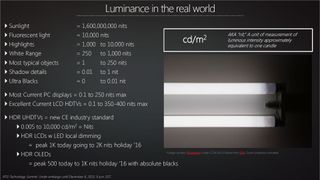

Kicking things off was a discussion of why our displays are holding us back. This has been a long-standing problem, dating back to the creation of many of our early display technologies in the 1930s. At the time, CRT displays were primitive and offered a very limited range of output. We’ve progressed a lot since those early CRTs, but unfortunately our content and hardware haven’t really kept pace. When you compare what our eyes see in the real world with what displays can do, there’s a vast chasm:

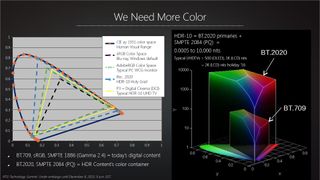

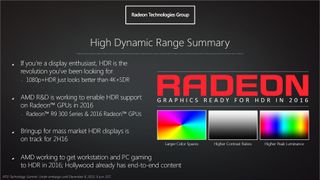

The sRGB color space is still the default in Windows, for example, and it comes nowhere near covering the colors human eyes can perceive. Other expanded color spaces exist, including AdobeRGB (defined way back in 1998), along with newer standards like P3 and Rec. 2020. These new standards will move us from our limited sRGB outputs to High Dynamic Range (HDR) content, promising a large step forward.
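To put a rough number on how much bigger those color spaces are, here is a minimal sketch that compares the triangles formed by each standard's red, green, and blue primaries on the CIE 1931 xy chromaticity diagram. The primaries are the published values for each standard; the function names are just for illustration. Keep in mind that area on the xy diagram is not perceptually uniform, so treat the ratios as a ballpark comparison rather than a measure of how many more colors you would actually see.

```python
# CIE 1931 xy chromaticity coordinates of the (red, green, blue) primaries
# for each color space, as published in the respective standards.
PRIMARIES = {
    "sRGB": [(0.640, 0.330), (0.300, 0.600), (0.150, 0.060)],
    "AdobeRGB": [(0.640, 0.330), (0.210, 0.710), (0.150, 0.060)],
    "P3": [(0.680, 0.320), (0.265, 0.690), (0.150, 0.060)],
    "Rec. 2020": [(0.708, 0.292), (0.170, 0.797), (0.131, 0.046)],
}


def triangle_area(points):
    """Area of the triangle spanned by three (x, y) points (shoelace formula)."""
    (x1, y1), (x2, y2), (x3, y3) = points
    return abs(x1 * (y2 - y3) + x2 * (y3 - y1) + x3 * (y1 - y2)) / 2.0


def relative_gamut_area(name, reference="sRGB"):
    """Gamut triangle area of `name` as a multiple of the reference gamut's area."""
    return triangle_area(PRIMARIES[name]) / triangle_area(PRIMARIES[reference])


if __name__ == "__main__":
    for name in PRIMARIES:
        print(f"{name:10s} {relative_gamut_area(name):.2f}x the sRGB triangle")
```

By this crude measure, AdobeRGB and P3 each cover roughly a third more area than sRGB, while the Rec. 2020 triangle comes out at a bit under twice the size.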

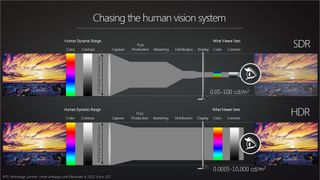

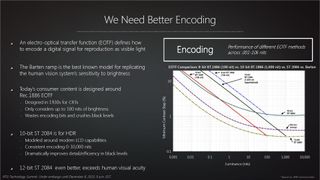

What that means for the coming year is that you’re really going to want a display that can support HDR content. This is different from the HDR rendering already seen in games: much of the rendering is done internally in HDR, but the final output still needs to be dumbed down to fit a standard dynamic range (SDR) panel. This process is called tonemapping, and we’ve been doing it for years. Now we’re going to need to change the algorithms to accommodate displays that natively support HDR content, and then we’re going to need to use those new displays. Hollywood already has end-to-end HDR content and hardware available, and now AMD is working on bringing that same fidelity to PC gaming and workstations in 2016.
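As a rough illustration of what tonemapping does, here is a minimal sketch using the extended Reinhard operator, one classic curve among many. It is not necessarily what any particular game engine or AMD's drivers use. The function names, the default `white` value, and the plain 2.2 gamma (standing in for the real sRGB transfer function) are all assumptions made for the example.

```python
def reinhard_extended(value, white):
    """Compress a scene-linear HDR value into [0, 1].

    `white` is the smallest input that maps to exactly 1.0; anything
    brighter is clipped. This is the extended Reinhard curve.
    """
    if value < 0.0:
        raise ValueError("value must be non-negative")
    if white <= 0.0:
        raise ValueError("white must be positive")
    mapped = value * (1.0 + value / (white * white)) / (1.0 + value)
    return min(mapped, 1.0)


def to_sdr_8bit(value, white=4.0):
    """Tonemap one HDR value and encode it as an 8-bit SDR code value.

    Uses a simple 2.2 gamma as an approximation of the sRGB encoding.
    """
    mapped = reinhard_extended(value, white)
    return round((mapped ** (1.0 / 2.2)) * 255)


if __name__ == "__main__":
    # Hypothetical scene values: deep shadow through a bright highlight.
    for scene_value in (0.01, 0.18, 1.0, 4.0, 16.0):
        print(scene_value, "->", to_sdr_8bit(scene_value))
```

Note how everything at or above `white` collapses to the same code value of 255. That lost highlight detail is exactly what a display with native HDR support would let the tonemapper keep.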

The one aspect of HDR that’s a bit concerning is the supported output range. Do we really want displays that can sear our eyeballs with 10,000 nits? I have a laptop that does 450 nits, and at maximum brightness it often feels like way more than I need (though it’s handy outdoors). What we really need is better contrast, not just insane brightness levels. I shudder to think about future retail demo units getting cranked to maximum brightness to demonstrate superiority over displays that “only” reach 1,000 nits. The idea is to create more lifelike visuals, but if you’re watching a movie in a dark room, do you really want your display to hit a flash-bomb 10,000 nits for on-screen explosions?
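To see why contrast matters more than raw brightness, it helps to express a display's range in photographic stops, where each stop is a doubling of luminance. The sketch below is a back-of-the-envelope calculation; the function name and the peak and black level figures are hypothetical, not measurements of any real display.

```python
import math


def dynamic_range_stops(peak_nits, black_nits):
    """Dynamic range in stops (doublings) between black level and peak white."""
    if peak_nits <= 0.0 or black_nits <= 0.0:
        raise ValueError("luminance values must be positive")
    return math.log2(peak_nits / black_nits)


if __name__ == "__main__":
    # Hypothetical panels: (label, peak nits, black level in nits).
    panels = [
        ("very bright, grayish blacks", 10000.0, 1.0),
        ("moderately bright, deep blacks", 1000.0, 0.001),
    ]
    for label, peak, black in panels:
        print(f"{label}: {dynamic_range_stops(peak, black):.1f} stops")
```

Under these made-up numbers, the dimmer panel with deep blacks spans noticeably more stops than the eyeball-searing one, which is the point: lowering the black level buys dynamic range without flash-bombing anyone.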

Jarred's love of computers dates back to the dark ages when his dad brought home a DOS 2.3 PC and he left his C-64 behind. He eventually built his first custom PC in 1990 with a 286 12MHz, only to discover it was already woefully outdated when Wing Commander was released a few months later. He holds a BS in Computer Science from Brigham Young University and has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.
